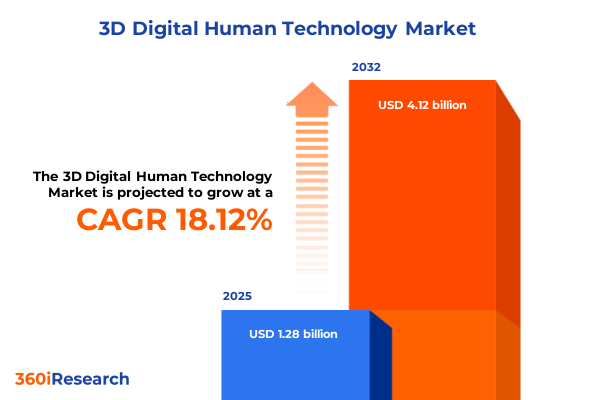

The 3D Digital Human Technology Market size was estimated at USD 1.28 billion in 2025 and is expected to reach USD 1.47 billion in 2026, growing at a CAGR of 18.12% to reach USD 4.12 billion by 2032.
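As a rough sanity check on the headline figures, the compound annual growth rate can be recomputed from the stated endpoints. The sketch below assumes the 18.12% CAGR is measured from the 2025 base over seven annual periods to 2032; the report does not state its base year explicitly, so that reading, along with the function and variable names, is an assumption made for illustration.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value reached after compounding `start_value` at `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


# Figures quoted in the summary (USD billions).
base_2025 = 1.28
forecast_2032 = 4.12
stated_cagr = 0.1812

# Assumption: the CAGR spans 2025 -> 2032, i.e. seven annual periods.
periods = 2032 - 2025

implied_cagr = cagr(base_2025, forecast_2032, periods)
projected_2032 = project(base_2025, stated_cagr, periods)

print(f"Implied CAGR 2025-2032: {implied_cagr:.2%}")
print(f"2032 value at stated CAGR: USD {projected_2032:.2f} billion")
```

Under that assumption, the implied rate and the projected 2032 value both land close to the published numbers, with the small gap attributable to rounding in the quoted figures.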

Setting the Stage for the Human-Centric Digital Revolution: Foundations and Context for 3D Digital Human Technology

The evolution of 3D digital human technology represents a profound shift in how organizations and consumers engage with virtual environments. By blending high-fidelity graphics, real-time rendering, and advanced artificial intelligence, digital humans have transcended their origins in gaming and animation to become integral interfaces for education, healthcare, retail, and enterprise applications. This introductory overview underscores how these sophisticated avatars are reshaping user expectations around personalization, empathy, and immersion, bridging the gap between the physical and digital worlds.

As organizations strive to differentiate their customer experiences and streamline operations, digital humans offer a unique combination of scalable interactivity and human-like responsiveness. Early adopters have demonstrated that embedding digital personas into customer service, training simulations, and virtual showrooms not only elevates engagement but also reduces costs associated with traditional labor models. With generative AI models now capable of creating lifelike expressions, gestures, and dialogue, these virtual agents can convey empathy and adapt dynamically to individual user behaviors, setting a new standard for digital interaction.

Moving forward, the confluence of neural rendering techniques and multimodal AI (integrating motion capture, facial recognition, and voice-driven emotion analysis) will further narrow the uncanny valley. Platforms like Epic Games’ MetaHuman Creator have embedded high-detail character creation directly into development pipelines, democratizing access to professional-grade avatars and accelerating time to market. As these capabilities mature, digital humans will evolve from niche experiments into mission-critical assets for brands and institutions seeking to deliver hyper-personalized, empathetic experiences at scale.

Navigating the Convergence of AI, Rendering, and Interactive Avatars Reshaping 3D Digital Human Experiences Today

The landscape of 3D digital human technology is undergoing transformative shifts driven by the integration of emotion AI, advancements in rendering engines, and the proliferation of cloud-native deployment models. Emotion AI modules now leverage deep learning to interpret facial microexpressions and vocal intonations in real time, enabling virtual characters to respond with contextual empathy rather than scripted responses. This leap in authenticity has catalyzed a wave of use cases in mental health support, telemedicine, and virtual customer service, where the perceived emotional intelligence of an avatar has a direct impact on user trust and engagement, as illustrated by Soul Machines’ work with healthcare education platforms.

Simultaneously, neural rendering frameworks such as GAN-based pipelines and Neural Radiance Fields (NeRFs) are redefining how digital humans are generated and animated. By synthesizing photorealistic textures and geometry from sparse input data, these methods drastically reduce production time and hardware requirements. Unreal Engine’s recent 5.6 release exemplifies this trend, embedding MetaHuman Creator into its core engine and enabling full-body authoring and marketplace distribution, thereby empowering developers to create, share, and monetize digital human assets across multiple platforms.

Furthermore, the move toward cloud-first architectures is reshaping deployment and scalability. Even so, organizations are pairing cloud-based services with hybrid and on-premises delivery to balance latency needs, data privacy concerns, and cost considerations. This flexibility is essential for industries such as finance and government, where regulatory compliance and secure data handling are paramount. As compute-intensive tasks migrate to distributed GPU clusters and edge compute nodes, enterprises can deliver consistent digital human experiences across diverse endpoints, from mobile AR applications to large-scale virtual event stages.

Assessing the Broad Spectrum Effects of 2025 U.S. Tariff Policies on 3D Digital Human Technology Supply Chains and Costs

The U.S. administration’s imposition of reciprocal tariffs in 2025 has introduced new complexities into global supply chains for 3D digital human technologies. Key hardware components, such as high-resolution camera systems, specialized graphics processors, and sensor arrays, now face additional duties, increasing procurement costs and extending lead times. Analysis indicates that a 20 percent levy on semiconductor imports has placed pressure on companies reliant on advanced GPUs, while a 25 percent tariff on optical motion-capture systems has disrupted traditional hardware sourcing channels.
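To make the cost effect concrete, the duties cited above can be applied to a simple hardware budget. The sketch below is illustrative only: the two tariff rates come from the paragraph above, while the component list, the pre-tariff prices, and all names in the code are hypothetical placeholders rather than figures from the report.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    base_cost_usd: float  # HYPOTHETICAL pre-tariff price, for illustration only
    tariff_rate: float    # ad valorem duty as a fraction of base cost


def landed_cost(component: Component) -> float:
    """Cost of a component after the duty is applied to its base price."""
    return component.base_cost_usd * (1 + component.tariff_rate)


# Rates from the text: 20% on semiconductor imports, 25% on optical mocap systems.
# Base costs are made-up placeholders, not data from the report.
budget = [
    Component("GPU workstation (semiconductor import)", 10_000.0, 0.20),
    Component("Optical motion-capture system", 40_000.0, 0.25),
]

total_before = sum(c.base_cost_usd for c in budget)
total_after = sum(landed_cost(c) for c in budget)
blended_increase = total_after / total_before - 1

for c in budget:
    print(f"{c.name}: {c.base_cost_usd:,.0f} -> {landed_cost(c):,.0f} USD")
print(f"Blended hardware cost increase: {blended_increase:.1%}")
```

The blended increase depends on the mix of components: a budget weighted toward motion-capture hardware moves closer to the 25 percent rate, while a GPU-heavy budget moves closer to 20 percent.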

These elevated costs cascade through the value chain, prompting service providers and software vendors to reevaluate their pricing models. For example, video production platforms that generate synthetic spokespeople are now incorporating surcharges to offset the higher expense of real-time rendering hardware. As consumer electronics firms grapple with average price hikes (documented as a 31 percent increase for smartphones and a 69 percent rise in console prices), development studios and digital content providers may confront a reduction in short-term adoption rates due to budget constraints.

In addition to direct cost pressures, the risk of retaliatory tariffs has heightened market volatility. A recent study projects a potential contraction of U.S. exports of Information Technology Agreement (ITA) products by up to $56 billion if countermeasures are enacted, particularly affecting software modules and AI engines shipped to key trading partners. Such uncertainty incentivizes firms to diversify supply chains, accelerate domestic manufacturing under programs like the CHIPS Act, and embrace modular software architectures that can be localized to specific regions without violating tariff regulations.

Decoding the Multifaceted Segmentation Landscape That Drives Adoption and Innovation in the 3D Digital Human Ecosystem

In examining the 3D digital human market through a segmentation lens, distinct patterns emerge across application domains that reveal unique drivers of adoption. Advertising and marketing applications leverage interactive campaign tools and virtual showrooms to elevate brand storytelling, while education and training scenarios rely on skill simulations and virtual classrooms to deliver repeatable, risk-free experiences. Film and entertainment stakeholders harness animation, live events, and virtual production to craft immersive narratives that resonate with global audiences. Gaming developers optimize for console, mobile, and PC experiences, tailoring avatar fidelity and interaction complexity to platform capabilities. Healthcare providers deploy surgical simulation and telemedicine avatars to enhance patient outcomes and operational efficiency. Retail and e-commerce platforms integrate interactive catalogs and virtual try-on solutions to bridge the gap between online discovery and purchase confidence.

From a technology perspective, market participants select from emotion AI frameworks, spanning facial expression analysis to voice-based sentiment detection, and deploy facial recognition infrastructures based on two-dimensional or full three-dimensional models. Motion capture systems vary from inertial sensors to magnetic suites and optical configurations, with marker-based and markerless techniques catering to different levels of studio investment and mobility requirements. Neural rendering approaches, including GAN-based pipelines and radiance-field reconstructions, are complemented by classical three-dimensional modeling methods such as NURBS surfaces and polygonal meshes, enabling a broad spectrum of visual quality and computational efficiency.

Component segmentation underscores the interdependence of hardware, software, and services. Camera arrays, graphics processors, and storage devices form the hardware backbone. AI modules, animation engines, rendering pipelines, SDKs, and modeling suites comprise the software ecosystem, while integration consultancy and maintenance support services ensure seamless implementation and continuous optimization. Organizations may choose cloud-based delivery for elastic scalability, hybrid solutions to balance performance with data governance, or on-premises installations to meet stringent security mandates. Finally, end-user contexts span academic and research institutions, consumer segments including gamers and social media users, and enterprise verticals across education, healthcare, media and entertainment, and retail. Immersive contexts extend from augmented reality overlays to fully realized virtual reality worlds, with resolution modes ranging from high-fidelity pre-rendered sequences to real-time interactive streams.

This comprehensive research report categorizes the 3D Digital Human Technology market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Application

- Technology

- Component

- Delivery Mode

- End User

- Immersive Context

- Resolution Mode

Unearthing Regional Dynamics and Growth Drivers Across the Americas, EMEA, and Asia-Pacific in the 3D Digital Human Market

Regional dynamics in the 3D digital human market reflect both the maturity of technology ecosystems and the unique strategic priorities of different areas. In the Americas, North American organizations lead through deep investments in cloud infrastructure, strong regulatory frameworks around biometric data, and a dense network of technology partners ranging from motion-capture studios to speech analytics vendors. This environment accelerates pilot-to-scale transitions, particularly in retail, finance, and streaming media, where digital avatars augment customer touchpoints and automate routine interactions.

In Europe, Middle East & Africa, growth is anchored in innovation clusters characterized by automotive manufacturing, luxury retail, and financial services. The European Union’s AI Act, which entered into force in August 2024 with obligations phasing in over subsequent years, is driving vendors to incorporate transparent watermarking and audit trails in digital-human deployments, positioning compliance readiness as a differentiator in public tenders and enterprise contracts. Meanwhile, sovereign investment in tech hubs from the Gulf Cooperation Council and targeted R&D grants in Eastern Europe are expanding use cases for virtual customer assistants in tourism, smart city interfaces, and cross-border e-commerce platforms.

Asia-Pacific stands out for its rapid adoption, fueled by government stimulus for AI infrastructure, private-sector funding in consumer-electronics research parks, and culturally embedded applications such as virtual idols and holographic lecturers. China’s tech giants are incorporating real-time facial and gesture recognition into live-stream shopping experiences, while South Korean and Japanese universities pilot mixed-reality classrooms that merge physical campus environments with metaverse portals. These region-specific innovations create feedback loops that enrich local data sets and reinforce rapid scale-up of digital human solutions.

This comprehensive research report examines key regions that drive the evolution of the 3D Digital Human Technology market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Highlighting Industry Pioneers and Visionary Enterprises Leading Innovation in 3D Digital Human Technology Across Sectors

A diverse ecosystem of technology vendors, startups, and established enterprises is shaping the trajectory of 3D digital human innovation. Epic Games, with its MetaHuman Creator embedded in Unreal Engine 5.6, empowers developers with parametric body and facial authoring tools and a marketplace for asset trading across game engines and creative suites. Soul Machines leads in autonomously animated digital people, combining experiential AI with emotional intelligence modules to simulate human-like interactions in healthcare education and customer service settings.

Hour One specializes in cloud-based synthetic characters derived from real human likenesses, enabling enterprises to scale video production for e-commerce, training, and communications without live actors. Genies, an AI avatar platform, offers personalized 3D avatars for social media, branded experiences, and SDK integrations, fueling user-generated content ecosystems inside messaging and creative applications. Synthesia, now valued at $2.1 billion, leverages generative AI to produce lifelike video avatars and voiceovers, positioning itself as a leader among AI-driven media companies in Europe and North America.

Beyond pure-play digital human vendors, robotics firms such as Furhat Robotics and Unith expand the frontier by embedding conversational avatars into embodied social robots, offering multimodal interaction for customer engagement, education, and research applications. BioDigital brings 3D human anatomy visualization to life, supporting healthcare professionals and educators with interactive anatomical maps that democratize access to life science insights. Together, these innovators form a collaborative network driving continuous improvements in performance, accessibility, and real-world impact.

This comprehensive research report delivers an in-depth overview of the principal market players in the 3D Digital Human Technology market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- Adobe Inc.

- Apple Inc.

- Autodesk, Inc.

- DeepBrain AI

- Didimo, Inc.

- Epic Games, Inc.

- Google LLC

- Hour One AI

- IBM Corporation

- Microsoft Corporation

- NVIDIA Corporation

- ObEN, Inc.

- Pinscreen, Inc.

- Reallusion Inc.

- Samsung Electronics Co., Ltd.

- Soul Machines Limited

- Synthesia Ltd.

- UneeQ Limited

- Unity Technologies

Charting a Strategic Path Forward with Actionable Recommendations for Industry Leaders Embracing 3D Digital Human Solutions

Industry leaders seeking to leverage 3D digital human technologies must align strategic priorities with technical capabilities and evolving user expectations. First, organizations should audit their customer journey touchpoints to identify high-impact scenarios, such as virtual sales consultations, employee training programs, or remote patient monitoring, where digital humans can deliver measurable benefits. Conducting controlled pilot programs with clear success metrics accelerates stakeholder buy-in and uncovers integration challenges early.

Second, embracing modular architectures and open standards ensures future flexibility. By selecting solutions that support common SDKs, cloud APIs, and interoperability with leading game engines or visualization platforms, companies mitigate vendor lock-in and streamline cross-functional workflows. Co-development partnerships with specialized vendors, such as motion-capture studios, AI model providers, or ergonomic design firms, can unlock deeper customization and reduce time-to-market.

Third, investing in upskilling internal teams is essential. Cross-disciplinary training in emotion AI, real-time rendering, and user experience design fosters a culture of experimentation and rapid iteration. Organizations should consider establishing internal centers of excellence to curate best practices, conduct ethical reviews, and steward digital-human assets throughout their lifecycle. Finally, as regulations around biometrics and synthetic media tighten globally, enterprises must institute robust governance frameworks for data privacy, security, and transparency, embedding audit trails and user consent mechanisms into every deployment.

Outlining a Rigorous Research Methodology That Ensures Robust Insights into 3D Digital Human Market Dynamics and Trends

This analysis draws on a mixed-methods research approach combining primary interviews, secondary literature reviews, and supply chain assessments. Primary research included structured interviews with executive stakeholders from leading digital-human vendors, developer surveys capturing integration challenges, and workshops with end users across healthcare, retail, and entertainment to validate user experience assumptions. Secondary research encompassed a comprehensive review of industry publications, technology roadmaps, and policy frameworks to contextualize regulatory developments and emerging standards.

Quantitative data were triangulated from public company disclosures, open-source repositories of motion-capture benchmarks, and anonymized cloud infrastructure usage reports, ensuring a robust empirical foundation. Supply chain sensitivity analyses modeled the impact of tariff scenarios on component lead times and cost structures, drawing from tariff schedules and trade databases. Throughout, the research adhered to ethical guidelines for confidentiality and data security, with anonymized data points aggregated to protect proprietary information. This rigorous methodology ensures that the insights and recommendations reflect both the current state and future trajectories of the 3D digital human market.

This section provides a structured overview of our comprehensive research report on the 3D Digital Human Technology market, outlining the key chapters and topics covered for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- 3D Digital Human Technology Market, by Application

- 3D Digital Human Technology Market, by Technology

- 3D Digital Human Technology Market, by Component

- 3D Digital Human Technology Market, by Delivery Mode

- 3D Digital Human Technology Market, by End User

- 3D Digital Human Technology Market, by Immersive Context

- 3D Digital Human Technology Market, by Resolution Mode

- 3D Digital Human Technology Market, by Region

- 3D Digital Human Technology Market, by Group

- 3D Digital Human Technology Market, by Country

- United States 3D Digital Human Technology Market

- China 3D Digital Human Technology Market

- Competitive Landscape

- List of Figures [Total: 19]

- List of Tables [Total: 4134]

Synthesizing Core Findings and Strategic Implications to Conclude on the State of 3D Digital Human Technology Adoption

As 3D digital human technology matures, the convergence of generative AI, neural rendering, and immersive deployment platforms is setting a new paradigm for human–machine interaction. Organizations that strategically integrate digital humans into high-impact use cases, while maintaining modular architectures and rigorous governance, are poised to gain competitive advantage in customer engagement, workforce training, and operational efficiency.

Regional dynamics underscore the importance of localized strategies: North American enterprises benefit from established cloud ecosystems and clear regulatory guidance; EMEA firms gain traction through compliance-driven innovation and sovereign investment; Asia-Pacific leaders accelerate adoption via government incentives and culturally resonant applications. Navigating tariff uncertainties requires supply chain diversification and software localization to maintain cost competitiveness.

By collaborating with pioneering vendors, investing in internal expertise, and piloting targeted implementations, industry leaders can transform digital-human solutions from experimental proofs of concept into scalable, value-driving assets. This executive summary provides a comprehensive foundation for informed decision-making, enabling stakeholders to chart a clear roadmap toward realizing the full potential of 3D digital human technology.

Connect with Ketan Rohom to Secure Your Definitive Market Intelligence Report on 3D Digital Human Technology Today

To gain comprehensive, data-driven insights and strategic guidance tailored to your organizational needs in the rapidly evolving field of 3D digital human technology, we invite you to connect with Ketan Rohom, Associate Director of Sales & Marketing. Ketan specializes in helping decision-makers access critical market intelligence that empowers informed investment, partnership, and innovation strategies. Reach out today to secure your definitive market research report and position your enterprise at the forefront of this transformative industry.
