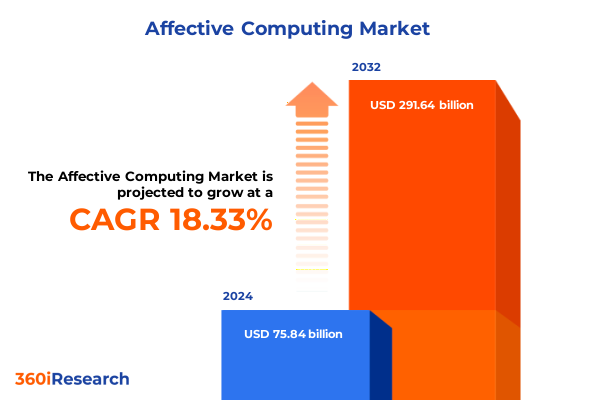

The Affective Computing Market size was estimated at USD 88.30 billion in 2025 and is expected to reach USD 102.83 billion in 2026, growing at a CAGR of 18.61% to reach USD 291.64 billion by 2032.
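
The headline figures can be cross-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below assumes, as the arithmetic suggests, that the 18.61% rate is measured from the 2025 base over the seven years to 2032. The function names are illustrative and not part of the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Headline estimates in USD billions; 2025 is assumed to be the base year.
BASE_2025 = 88.30
TARGET_2032 = 291.64

rate = cagr(BASE_2025, TARGET_2032, 2032 - 2025)
print(f"CAGR 2025-2032: {rate:.2%}")  # CAGR 2025-2032: 18.61%

# Compounding the base at the stated 18.61% for seven years lands close to the 2032 figure.
print(f"Projected 2032: {project(BASE_2025, 0.1861, 7):.2f}")
```

Under that assumption the three figures are mutually consistent. Measured from the 2026 estimate instead, the implied rate over the six years to 2032 would be roughly 19%, so the base year matters when comparing this forecast with others.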

Pioneering the Dawn of Emotional Intelligence in Technology Through a Comprehensive Overview of Affective Computing Fundamentals and Strategic Relevance

Affective computing sits at the intersection of emotional intelligence and digital innovation and represents a shift in how machines interpret and respond to human emotions. The field integrates computer vision, natural language processing, physiological signal analysis, and acoustic modeling to build systems that can recognize and adapt to human affective states. As organizations work to humanize digital interactions, affective computing is emerging as a cornerstone technology that enables personalized user experiences, supports workplace safety, and refines customer engagement strategies.

Recent advances in sensor technology and artificial intelligence have moved affective computing beyond theoretical frameworks into real-world applications. Deep learning architectures now improve the detection of micro-expressions, subtle voice inflections, and physiological markers, driving the development of holistic emotion recognition platforms. These innovations are powering next-generation solutions across industries, where understanding the emotional context of user behavior can translate into higher satisfaction, improved productivity, and more effective decision-making.

Looking ahead, the convergence of multimodal data fusion and edge computing is expected to make real-time emotion analytics practical at scale. Organizations that harness these capabilities will be well positioned to build empathetic user interfaces, accelerate therapeutic interventions in healthcare, and redefine personalized marketing in retail. This introduction sets the stage for an exploration of the forces shaping affective computing, tracing its trajectory from conceptual foundations to strategic imperatives.

Unveiling the Convergence of Artificial Intelligence and Sensor Innovations That Redefine Emotional Recognition Capabilities

Over the past decade, affective computing has evolved rapidly, driven by advances in artificial intelligence, sensor integration, and data analytics. Early efforts focused on isolated channels such as facial expression recognition or voice tone analysis. Today, multimodal emotion recognition systems combine visual, auditory, and physiological inputs to build a more nuanced picture of human affective cues.
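
The report does not say how vendors combine modalities. One simple and widely used approach is late (decision-level) fusion: a separate model for each channel outputs emotion probabilities, and the system takes a weighted average. The sketch below assumes that approach; the label set, modality names, weights, and the `late_fusion` and `top_emotion` functions are all hypothetical.

```python
from typing import Dict, List

# Hypothetical label set; real systems define their own emotion taxonomies.
EMOTIONS: List[str] = ["anger", "joy", "neutral", "sadness"]


def late_fusion(modality_probs: Dict[str, List[float]],
                weights: Dict[str, float]) -> List[float]:
    """Fuse per-modality emotion probabilities by weighted averaging.

    Each value in modality_probs is a probability vector over EMOTIONS
    produced by an independent model (e.g., face, voice, physiology).
    Modalities missing from `weights` get weight 0.
    """
    fused = [0.0] * len(EMOTIONS)
    total_weight = 0.0
    for modality, probs in modality_probs.items():
        if len(probs) != len(EMOTIONS):
            raise ValueError(f"{modality}: expected {len(EMOTIONS)} scores")
        w = weights.get(modality, 0.0)
        for i, p in enumerate(probs):
            fused[i] += w * p
        total_weight += w
    if total_weight <= 0:
        raise ValueError("at least one modality needs a positive weight")
    # If every input vector sums to 1, dividing by the total weight
    # keeps the fused result a probability distribution.
    return [score / total_weight for score in fused]


def top_emotion(probs: List[float]) -> str:
    """Return the label with the highest fused probability."""
    return EMOTIONS[max(range(len(probs)), key=probs.__getitem__)]


fused = late_fusion(
    {
        "face": [0.1, 0.6, 0.2, 0.1],
        "voice": [0.2, 0.3, 0.4, 0.1],
        "physiology": [0.3, 0.3, 0.3, 0.1],
    },
    weights={"face": 0.5, "voice": 0.3, "physiology": 0.2},
)
print(top_emotion(fused))  # joy
```

Late fusion degrades gracefully when a sensor is unavailable (its weight can simply be set to zero), but it cannot model interactions between channels; feature-level (early) fusion or learned fusion models trade that simplicity for richer cross-modal modeling.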

Deep learning frameworks have also enabled context-aware emotion inference, allowing systems to interpret emotional signals in real-world environments. This shift has been accelerated by the proliferation of wearable devices with sensors that track biometric data, and by natural language processing techniques that detect emotional nuance in text. Together, these developments make continuous emotion monitoring and adaptive feedback loops a new basis for user engagement.

As businesses increasingly prioritize customer experience, affective computing has become a strategic differentiator across sectors. Organizations are embedding emotion recognition into virtual assistants, autonomous vehicles, and telehealth platforms to deliver more empathetic interactions. At the same time, the ethical debate around data privacy has encouraged privacy-by-design frameworks intended to help emotionally intelligent systems earn and keep user trust. This section analyzes the cumulative impact of these shifts on a landscape that is in flux and rich in opportunity for forward-thinking innovators.

Assessing the Multifaceted Consequences of Newly Imposed United States Tariffs on Core Affective Computing Components

In 2025, the United States enacted a new tranche of tariffs targeting critical components integral to affective computing systems, including high-precision cameras, advanced microphones, specialized sensors, and select semiconductors. These measures, aimed at rebalancing trade relations and fostering domestic manufacturing, have collectively altered the cost structures underpinning hardware procurement and production strategies.

The increased duties on camera modules and wearable device components have prompted suppliers to reassess their manufacturing footprints, leading many to diversify assembly operations beyond traditional export hubs. This realignment has accelerated investment in North American production facilities, with some sensor manufacturers announcing partnerships to establish localized fabrication lines. Consequently, while tariff-driven cost pressures have raised upfront expenditures, the longer-term effect is likely to be more resilient and geopolitically diversified supply chains.

Software-centric providers, meanwhile, have responded to rising hardware costs by optimizing their platforms for lower-resolution sensors and improving algorithmic efficiency to maintain performance thresholds. This approach has not only softened the immediate impact of tariff-induced price increases but has also spurred innovation in edge computing, enabling on-device emotion analysis that reduces latency and bandwidth demands. Together, these dynamics show that tariff regimes act as both a challenge and a catalyst for strategic realignment within the affective computing ecosystem.

Delving into Comprehensive Application-Based and Technology-Driven Market Segmentation Insights to Illuminate Distinct Opportunity Vectors

A granular examination of market segmentation reveals differentiated trajectories across application verticals. Automotive stakeholders integrate emotion recognition to enhance in-vehicle safety systems; banking and financial services institutions deploy emotion analysis to improve customer service interactions; consumer electronics brands embed emotion sensors to personalize device experiences; healthcare providers use physiological and facial emotion detection for mental health monitoring; and retail and e-commerce platforms apply emotion insights to optimize customer engagement. This spectrum of applications underscores the versatility of affective computing in addressing specific operational challenges and user needs.

Exploring the technological segmentation, facial emotion recognition remains foundational, supported by deep convolutional networks that discern micro-expressions. Meanwhile, multimodal emotion recognition synthesizes visual, vocal, and textual cues to deliver robust emotion inference. Physiological emotion detection taps sensors to capture biometric signals such as heart rate variability, galvanic skin response, and electroencephalogram patterns, while text-based emotion recognition leverages natural language understanding to gauge sentiment from unstructured data streams. Voice emotion recognition, powered by acoustic feature extraction and prosody analysis, completes the technological portfolio, offering applications across call centers and voice assistants.
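
Physiological pipelines typically convert raw signals into numeric features before any emotion model sees them. As one concrete example, the sketch below computes RMSSD (root mean square of successive differences), a common time-domain heart rate variability feature, from RR intervals, the times between successive heartbeats. The report does not specify which features vendors extract; the window values and the `rmssd` function are hypothetical, and mapping a feature like this to an emotional state would require a separately trained and validated model.

```python
import math
from typing import Sequence


def rmssd(rr_intervals_ms: Sequence[float]) -> float:
    """Root mean square of successive differences between RR intervals (ms).

    RMSSD is a standard time-domain heart rate variability (HRV) measure.
    """
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    # Differences between each pair of consecutive beat-to-beat intervals.
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Hypothetical beat-to-beat intervals from a wearable, in milliseconds.
window = [800.0, 810.0, 790.0, 805.0]
print(f"RMSSD: {rmssd(window):.1f} ms")  # RMSSD: 15.5 ms
```

In practice such features are computed over sliding windows and combined with others (for example, galvanic skin response levels) before classification.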

Components further diversify the ecosystem. Hardware solutions comprise high-definition cameras, sensitive microphones, environmental sensors, and wearable devices, while software offerings include enterprise-grade platforms and developer-oriented SDKs and APIs. Deployment models span cloud-hosted architectures that support scalable analytics and on-premises implementations that prioritize data sovereignty and low-latency processing. Finally, end-user segments range from automotive OEMs and financial institutions to electronics manufacturers, healthcare organizations, and retail and e-commerce enterprises, together outlining the landscape of demand drivers and solution providers.

This comprehensive research report categorizes the Affective Computing market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Application

- Technology

- Component

- Deployment Mode

Uncovering Geographic Market Dynamics and Regional Adoption Patterns That Shape Affective Computing Trajectories

The Americas have emerged as a leading region for affective computing adoption, driven by significant investments in research and development from key technology firms and strong collaboration between academic institutions and private enterprises. North American automotive OEMs and consumer electronics manufacturers are at the forefront, integrating emotion recognition features into next-generation vehicles and smart devices. At the same time, financial services hubs leverage affective analytics to refine customer engagement and risk assessment protocols. Latin America is gradually following suit, with pilot programs in healthcare and retail sectors exploring emotion-aware solutions to address local market nuances.

Across Europe, the Middle East, and Africa, regulatory frameworks emphasizing data privacy and ethical AI have shaped market development. European regulations such as the GDPR have prompted solution providers to embed privacy-by-design principles, thereby enhancing user trust. Within this region, automotive and industrial automation sectors are adopting affective computing for safety and productivity gains. Middle Eastern financial institutions are exploring emotion recognition to tailor digital banking experiences, while African healthcare initiatives are piloting physiological emotion detection to augment telemedicine services in remote areas.

In the Asia-Pacific region, dynamic growth is fueled by robust consumer electronics demand and government-backed smart city initiatives. Major technology conglomerates are investing heavily in multimodal emotion recognition research, while leading mobile device manufacturers embed advanced emotion sensors in flagship products. Healthcare providers in countries such as Japan and South Korea are integrating emotion analytics into telehealth platforms, and e-commerce giants across China and Southeast Asia leverage these insights to drive personalized shopping experiences. Collectively, the regional landscape underscores a global tapestry of innovation, regulatory adaptation, and localized use cases.

This comprehensive research report examines key regions that drive the evolution of the Affective Computing market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Analyzing Strategic Alliances, Product Innovations, and Competitive Positioning Among Leading Affective Computing Providers

Key players in the affective computing space continue to expand their capabilities through strategic partnerships, product enhancements, and targeted acquisitions. Some established competitors have deepened collaborations with automotive manufacturers to co-develop in-vehicle emotion recognition modules, while others have integrated their software platforms into leading voice assistant ecosystems. Meanwhile, emerging startups specializing in physiological emotion detection have secured significant venture funding to accelerate hardware miniaturization and algorithm refinement.

Across the software domain, companies offering developer-friendly SDKs and APIs have prioritized interoperability, enabling seamless integration with existing enterprise systems and fostering a growing ecosystem of third-party applications. Hardware vendors are similarly innovating by introducing new generations of compact, low-power sensors optimized for edge-based emotion analysis. Additionally, several technology giants are leveraging their cloud infrastructures to offer end-to-end emotion analytics platforms, bundling data storage, processing, and visualization capabilities under unified service agreements.

Collectively, these corporate strategies highlight a competitive environment where differentiation stems from both technological depth and ecosystem reach. Firms investing in multimodal research, open developer communities, and partnerships across verticals are positioned to capture emerging opportunities. At the same time, those focused on compliance and ethical AI are establishing trust credentials that resonate with privacy-conscious end users, further elevating their market standing.

This comprehensive research report delivers an in-depth overview of the principal market players in the Affective Computing market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- Affectiva, Inc.

- Amazon Web Services, Inc.

- Apple Inc.

- Beyond Verbal Ltd.

- Cipia Vision Ltd.

- Cogito Corporation

- Cognitec Systems GmbH

- Elliptic Laboratories ASA

- Google LLC

- Hume AI, Inc.

- iMotions A/S

- Intel Corporation

- International Business Machines Corporation

- Kairos AR, Inc.

- Microsoft Corporation

- NEC Corporation

- Nuance Communications, Inc.

- NuraLogix Corporation

- nViso SA

- Panasonic Corporation

- Qualcomm Technologies, Inc.

- Realeyes Ltd.

- Samsung Electronics Co., Ltd.

- Smart Eye AB

- SoftBank Robotics Corp.

- Sony Group Corporation

- Tobii AB

- Uniphore Software Systems

Implementing Multimodal Integration, Ethical AI, and Supply Chain Diversification to Future-Proof Industry Leadership in Affective Computing

Industry leaders should accelerate investments in multimodal platforms that unify facial, voice, text, and physiological emotion recognition to achieve more accurate and context-rich insights. By fostering partnerships with sensor manufacturers, software providers can co-innovate to optimize hardware-software interoperability and reduce deployment complexity. Simultaneously, organizations must prioritize data privacy and ethical AI frameworks, implementing robust consent mechanisms and transparent model governance to maintain user trust and regulatory compliance.

Moreover, supply chain diversification is critical to mitigating the impact of evolving tariff regimes. Businesses should evaluate near-shore manufacturing options for critical hardware components and explore collaborative ventures with domestic foundries. On the technology front, investing in edge computing architectures can help offset tariff-driven hardware costs by enabling on-device emotion analytics, reducing bandwidth dependency, and improving real-time responsiveness.

Finally, to fully capitalize on the global opportunity, companies should tailor their go-to-market strategies to regional regulatory landscapes and cultural nuances. This entails localizing data handling practices for markets with stringent privacy laws, aligning product features with regional use cases, and forging alliances with academic and industry consortiums. Such a multi-pronged approach will ensure that affective computing deployments are both technically robust and commercially sustainable in a rapidly evolving ecosystem.

Outlining a Robust Mixed Methodology That Combines Primary Qualitative Insights and Secondary Data Triangulation for Rigorous Analysis

The research methodology underpinning this analysis combines primary interviews with industry veterans, including technology executives, system integrators, and end-user representatives, to obtain firsthand perspectives on adoption drivers and challenges. Structured qualitative discussions were complemented by comprehensive secondary research, which involved reviewing peer-reviewed journals, patent filings, and regulatory filings to map technological trajectories and policy developments.

Data triangulation was employed to validate insights, cross-referencing findings from public company financial disclosures, government trade reports, and market advisory publications. The decomposition of market segmentation followed a rigorous framework, categorizing the landscape by application, technology, component, deployment mode, and end user. Each category was analyzed for maturity, growth enablers, and adoption barriers to generate granular insights.

To ensure reliability, statistical techniques such as regression analysis and variance decomposition were applied where quantitative data was available, while thematic coding was used to interpret qualitative interview findings. This mixed-method approach ensures that the report’s conclusions rest on a solid evidentiary foundation, offering stakeholders confidence in the strategic recommendations and market dynamics presented.

This section outlines the key chapters and topics covered in the comprehensive Affective Computing market research report, for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- Affective Computing Market, by Application

- Affective Computing Market, by Technology

- Affective Computing Market, by Component

- Affective Computing Market, by Deployment Mode

- Affective Computing Market, by Region

- Affective Computing Market, by Group

- Affective Computing Market, by Country

- United States Affective Computing Market

- China Affective Computing Market

- Competitive Landscape

- List of Figures [Total: 16]

- List of Tables [Total: 1113]

Synthesizing Strategic Insights to Illuminate the Path Forward for Emotionally Intelligent Technology Adoption

In summary, affective computing has transitioned from a conceptual frontier to a critical enabler of empathetic technology interactions, with applications spanning automotive safety, personalized healthcare, intelligent retail experiences, and beyond. Advances in multimodal emotion recognition and edge computing are dismantling traditional barriers, while evolving tariff environments and ethical considerations continue to shape strategic decision-making.

By understanding the intricate interplay between hardware innovation, software optimization, and regulatory landscapes, stakeholders can navigate the complexities of global adoption. Strategic investments in research partnerships, supply chain resilience, and privacy-by-design architectures will be paramount to capitalizing on the market’s vast potential.

As organizations seek to differentiate themselves through emotionally intelligent solutions, the insights and recommendations provided in this report furnish a clear roadmap for harnessing affective computing’s transformative power. This conclusion reinforces the call to action for industry leaders to embrace innovation, collaborate across ecosystems, and adopt forward-looking strategies that align with both technological and societal imperatives.

Unlock Comprehensive Affective Computing Market Intelligence by Connecting with Ketan Rohom, Your Strategic Partner for Informed Investment Decisions

We invite you to embark on a transformative journey toward unlocking the full potential of affective computing by securing the complete market research report directly from our leadership team. By engaging with Ketan Rohom, Associate Director, Sales & Marketing at 360iResearch, you will gain personalized access to in-depth data, strategic frameworks, and actionable insights tailored to your organization’s unique needs.

Partnering with Ketan Rohom ensures a seamless purchasing experience as he guides you through the report’s rich content, including detailed technology assessments, segmentation breakdowns, and regional analyses. Whether you are seeking to refine your product roadmap, strengthen supply chain resilience in light of evolving tariffs, or identify collaboration opportunities with leading innovators, this report serves as the definitive resource to drive confident decision-making and accelerate growth in the dynamic world of affective computing.
