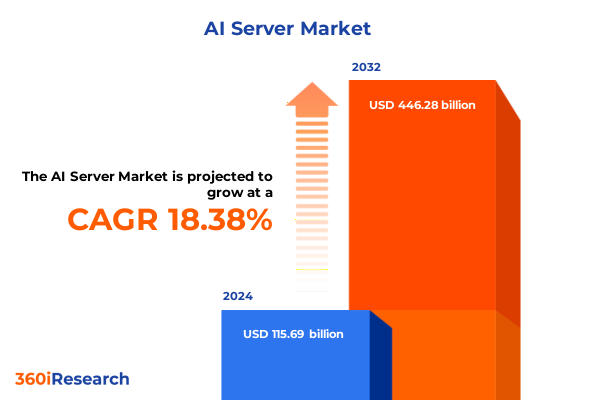

The AI Server Market size was estimated at USD 115.69 billion in 2024 and is expected to reach USD 136.49 billion in 2025, growing at a CAGR of 18.38% to USD 446.28 billion by 2032.
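The headline figures can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The short Python sketch below applies it to the quoted values. One assumption is involved: the summary does not say which base year the 18.38% uses, so the sketch computes the rate for both the 2024 and the 2025 starting points.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# Market sizes quoted in the summary, in USD billions
size_2024 = 115.69
size_2025 = 136.49
size_2032 = 446.28

rate_2024_2032 = cagr(size_2024, size_2032, 2032 - 2024)
rate_2025_2032 = cagr(size_2025, size_2032, 2032 - 2025)
print(f"2024-2032: {rate_2024_2032:.2%}")
print(f"2025-2032: {rate_2025_2032:.2%}")
```

With these inputs, the 2024 to 2032 horizon returns about 18.38%, which matches the quoted rate. Measuring from 2025 instead gives roughly 18.4%.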

AI servers at the heart of digital transformation as enterprises rearchitect infrastructure for data‑intensive and generative AI workloads

Artificial intelligence has become the defining workload for modern digital infrastructure, and AI servers now sit at the core of how organizations process, analyze, and act on data. Unlike traditional general‑purpose servers, AI‑optimized systems integrate high‑performance accelerators, high‑bandwidth memory, low‑latency interconnects, and specialized software stacks to handle the heavy parallelism required by machine learning, computer vision, natural language processing, and generative AI models. As enterprises deploy larger models and more complex pipelines, the demands placed on server compute, storage, and networking architectures are intensifying.

At the same time, the broader data center environment is undergoing rapid change. Power densities per rack have risen sharply in facilities hosting AI clusters, forcing operators to revisit long‑standing assumptions about power distribution, cooling strategies, and space utilization. Liquid and hybrid cooling designs are moving from niche experiments to mainstream options as conventional air‑cooled systems struggle to manage the heat generated by dense GPU and accelerator configurations. These physical constraints are now as central to AI server planning as chip choice or server form factor.

The AI server landscape is also being reshaped by shifts in deployment models and procurement behavior. While hyperscale cloud providers continue to lead early adoption, a growing share of enterprises are investing in dedicated AI infrastructure to support sensitive data, latency‑critical workloads, and cost optimization over the long term. Despite strong growth in cloud services, a substantial portion of global IT spending still resides in on‑premises environments, creating an enduring role for private AI clusters alongside public cloud offerings. In this context, understanding how AI server technologies, supply chains, and regulatory factors are evolving is becoming a strategic imperative rather than a technical curiosity.

This executive summary provides a structured view of that evolution. It explores how architectural innovations and deployment patterns are transforming the AI server category, how United States trade policy and export controls are influencing sourcing decisions, how demand is segmenting by workload, cooling approach, and end‑use industry, and how regional dynamics and leading companies are shaping opportunities. Together, these perspectives offer decision‑makers a coherent foundation for near‑term planning and long‑range infrastructure strategy.

Transformative shifts in AI server design, deployment, and operations are redefining performance, efficiency, and time‑to‑value across global data centers

The most profound shifts in the AI server landscape are occurring at the architectural level. Server designs are being re‑imagined around accelerators rather than CPUs, with dense configurations of GPUs and other purpose‑built processors connected by high‑speed, low‑latency fabrics. Recent generations of rack‑scale AI systems integrate dozens to hundreds of cutting‑edge accelerators into tightly coupled clusters, supported by advanced networking, high‑bandwidth memory, and optimized storage tiers. These systems are explicitly tuned for large‑scale training and inference, rather than general‑purpose compute, and are often delivered as pre‑validated building blocks that can be deployed rapidly into cloud or colocation environments.

Cooling and power delivery have become equally transformative. As AI racks routinely push beyond historical power envelopes, data center operators are embracing direct‑to‑chip and immersion liquid cooling to maintain performance and reliability while containing energy use. Independent analyses indicate that liquid cooling adoption in AI‑intensive facilities is accelerating quickly as organizations seek higher compute density and improved sustainability metrics. Facilities that once relied solely on chilled air now use hybrid approaches, dedicating liquid cooling to the most demanding AI servers while retaining air systems for lower‑density workloads, with a clear trajectory toward more pervasive liquid use over time.
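The hybrid pattern described above reserves liquid cooling for the densest AI racks and keeps air cooling elsewhere. It can be expressed as a simple threshold rule. This is a minimal sketch with three assumptions: the 30 kW air-cooling limit is a hypothetical placeholder rather than an industry figure, the rack loads are made up, and `Rack` and `assign_cooling` are illustrative names.

```python
from dataclasses import dataclass

# Hypothetical threshold: rack power (kW) above which this illustrative
# facility stops relying on air cooling alone. Not a vendor or industry figure.
AIR_COOLING_LIMIT_KW = 30.0

@dataclass
class Rack:
    name: str
    power_kw: float  # estimated IT load of the rack

def assign_cooling(racks: list[Rack], air_limit_kw: float = AIR_COOLING_LIMIT_KW) -> dict[str, str]:
    """Map each rack to 'liquid' if it exceeds the air limit, else 'air'."""
    return {
        rack.name: "liquid" if rack.power_kw > air_limit_kw else "air"
        for rack in racks
    }

# Illustrative hall mixing general-purpose, inference, and dense training racks
hall = [
    Rack("general-01", 12.0),
    Rack("inference-01", 25.0),
    Rack("training-01", 80.0),
    Rack("training-02", 120.0),
]
plan = assign_cooling(hall)
liquid_share = sum(1 for mode in plan.values() if mode == "liquid") / len(plan)
print(plan)
print(f"Racks on liquid cooling: {liquid_share:.0%}")
```

Lowering the threshold, or adding denser racks, raises the liquid share. This mirrors the trajectory toward more pervasive liquid use that the paragraph describes.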

Another transformative shift lies in the disaggregation of resources. AI workloads increasingly run on clusters where compute, storage, and networking can be scaled independently, enabling organizations to right‑size components according to training or inference needs. Cloud providers and large enterprises are deploying infrastructure that blurs the traditional boundaries between servers, racks, and entire data halls, treating them as composable pools of capacity. This trend is reinforced by the rise of custom accelerators and domain‑specific architectures that coexist with off‑the‑shelf GPUs, giving organizations more levers to balance performance, cost, and energy efficiency.
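Scaling compute, storage, and networking independently can be illustrated with a toy allocator. Each job draws every resource from its own pool instead of receiving a fixed server bundle. This is a minimal sketch under stated assumptions:

* The pool sizes, units, and job shapes are hypothetical.
* `CapacityPools` is an illustrative name.
* A production scheduler would also need to handle topology, fragmentation, and preemption. This all-or-nothing reservation ignores them.

```python
from dataclasses import dataclass, field

@dataclass
class CapacityPools:
    """Independent pools of capacity; units are illustrative."""
    accelerators: int
    storage_tb: int
    network_gbps: int
    allocations: dict[str, tuple[int, int, int]] = field(default_factory=dict)

    def allocate(self, job: str, accelerators: int, storage_tb: int, network_gbps: int) -> bool:
        """Reserve capacity from each pool independently; all-or-nothing."""
        if (accelerators > self.accelerators
                or storage_tb > self.storage_tb
                or network_gbps > self.network_gbps):
            return False
        self.accelerators -= accelerators
        self.storage_tb -= storage_tb
        self.network_gbps -= network_gbps
        self.allocations[job] = (accelerators, storage_tb, network_gbps)
        return True

    def release(self, job: str) -> None:
        """Return a job's reservation to the pools."""
        accelerators, storage_tb, network_gbps = self.allocations.pop(job)
        self.accelerators += accelerators
        self.storage_tb += storage_tb
        self.network_gbps += network_gbps

pools = CapacityPools(accelerators=64, storage_tb=500, network_gbps=3200)
print(pools.allocate("training-run", accelerators=48, storage_tb=300, network_gbps=2400))        # True
print(pools.allocate("chat-inference", accelerators=8, storage_tb=20, network_gbps=400))         # True
print(pools.allocate("second-training-run", accelerators=48, storage_tb=100, network_gbps=400))  # False: accelerator pool short
pools.release("training-run")
print(pools.allocate("second-training-run", accelerators=48, storage_tb=100, network_gbps=400))  # True
```

The training job is heavy on accelerators and fabric bandwidth, while the inference job takes only a small slice of each pool. That difference is the right-sizing the paragraph refers to.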

Finally, deployment patterns are evolving toward a continuum from core to edge. While centralized hyperscale data centers remain critical for large‑model training, inference is increasingly distributed to regional and edge locations to meet latency, data residency, and resilience requirements. Compact AI servers and edge‑optimized systems are appearing in telecom central offices, manufacturing sites, hospitals, and retail hubs, extending AI capabilities closer to where data is generated and decisions are made. Together, these shifts are redefining what it means to design, operate, and scale AI infrastructure.
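Placing an inference workload on the core-to-edge continuum can be framed as a filter over candidate tiers. The filter keeps only tiers that meet the workload's latency budget and data-residency constraint. This is a minimal sketch with the following assumptions:

* The tiers, latencies, and regions are hypothetical.
* Preferring the most centralized eligible tier is an illustrative policy choice, not a rule stated in this summary.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Tier:
    name: str
    round_trip_ms: float  # typical latency from the data source (hypothetical)
    region: str           # jurisdiction where the servers sit
    centrality: int       # higher = more centralized

# Hypothetical placement options, from hyperscale core to on-site edge
TIERS = [
    Tier("hyperscale-core", round_trip_ms=60.0, region="us", centrality=3),
    Tier("regional-dc", round_trip_ms=20.0, region="eu", centrality=2),
    Tier("factory-edge", round_trip_ms=2.0, region="eu", centrality=1),
]

def place(latency_budget_ms: float, allowed_regions: set[str], tiers=TIERS) -> Optional[Tier]:
    """Return the most centralized tier that meets latency and residency, or None."""
    eligible = [
        t for t in tiers
        if t.round_trip_ms <= latency_budget_ms and t.region in allowed_regions
    ]
    return max(eligible, key=lambda t: t.centrality, default=None)

print(place(100.0, {"us", "eu"}).name)  # hyperscale-core
print(place(100.0, {"eu"}).name)        # regional-dc: residency excludes the US core
print(place(5.0, {"eu"}).name)          # factory-edge: only tier fast enough
print(place(1.0, {"eu"}))               # None: no tier meets a 1 ms budget
```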

Cumulative impact of evolving United States tariffs and export controls in 2025 reshapes AI server supply chains, costs, and strategic sourcing

United States trade and industrial policy is exerting a growing influence on AI server strategy, particularly through tariffs and export controls that affect critical components such as semiconductors, wafers, and specialized materials. The Section 301 tariff regime on imports from China has evolved over several years, with recent actions increasing duties on key inputs including certain tungsten products, wafers, and polysilicon. These measures, effective from 2025, are intended to support domestic semiconductor investments and reduce exposure to supply chains viewed as vulnerable or strategically sensitive. For AI server buyers and manufacturers, higher tariffs on upstream materials can translate into cost pressure along the entire value chain, even when finished servers themselves are assembled in multiple regions.
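A toy bill of materials shows how duties on upstream inputs can surface in the cost of a server assembled elsewhere. This is a minimal sketch with the following assumptions:

* Every figure is hypothetical: the component costs, the share of each component's value that embeds tariffed inputs, and the duty rates.
* Duties are passed through in full, with no margin stacking along the chain.

```python
# Hypothetical bill of materials for one AI server (USD).
# exposed_share: fraction of the component's value that embeds tariffed inputs.
# duty_rate: illustrative ad valorem duty applied to that exposed value.
BOM = {
    "accelerators": {"cost": 200_000, "exposed_share": 0.05, "duty_rate": 0.50},
    "memory_and_storage": {"cost": 30_000, "exposed_share": 0.10, "duty_rate": 0.50},
    "chassis_and_cooling": {"cost": 15_000, "exposed_share": 0.30, "duty_rate": 0.25},
    "networking": {"cost": 10_000, "exposed_share": 0.00, "duty_rate": 0.00},
}

def tariff_pass_through(bom: dict) -> tuple[float, float]:
    """Return (base cost, added duty cost), assuming duties pass through 1:1."""
    base = sum(item["cost"] for item in bom.values())
    added = sum(
        item["cost"] * item["exposed_share"] * item["duty_rate"]
        for item in bom.values()
    )
    return base, added

base, added = tariff_pass_through(BOM)
print(f"Base cost: ${base:,.0f}; added duty: ${added:,.0f} ({added / base:.2%})")
```

In this sketch, even a small tariffed share inside high-value components adds a few percent to the finished system's cost.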

At the same time, 2025 has brought notable adjustments that partially ease the burden on downstream technology products. New reciprocal tariffs announced by the current administration initially threatened steep increases on a broad basket of electronics, including smartphones, computers, and related components. Subsequent revisions carved out significant exemptions for categories such as personal computers, servers, semiconductor devices, and displays, reducing the effective duty levels faced by major technology importers. For AI servers that rely heavily on contract manufacturing in Asia, these exemptions alleviate some near‑term cost escalation, though they do not eliminate the underlying volatility in trade policy.

Export controls add a second, more complex layer of impact. Over the past several years, the United States has applied licensing requirements to the export of advanced AI accelerators and complete systems to China, Russia, and certain other destinations, targeting high‑end GPUs and associated platforms used for large‑scale training. In 2025, the administration rescinded a broad Biden‑era rule that would have extended some restrictions to more than one hundred countries, citing concerns about innovation and diplomatic friction, while signaling that a more targeted framework will replace it. This combination of continued controls for specific geographies and a recalibrated global approach leaves suppliers and buyers navigating a patchwork of licensing and compliance obligations.

The cumulative effect of these measures is to push AI server strategies toward diversification and resilience. Manufacturers are adjusting sourcing to limit dependence on any single tariff‑exposed country, distributing production across multiple Asian, American, and European locations where feasible. Data center operators and large buyers, in turn, are weighing the benefits of closer‑to‑home assembly and configuration against potential cost savings offshore. Strategic planning now routinely incorporates scenario analysis around tariff changes, export licensing timelines, and the possibility of sudden regulatory shifts, making trade policy a central parameter in AI infrastructure roadmaps rather than a background consideration.
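Scenario analysis of this kind can be as simple as computing landed cost per sourcing option under several tariff scenarios. The comparison then looks at both the probability-weighted cost and the worst case for each option. This is a minimal sketch with the following assumptions:

* The sourcing options, base costs, duty rates, and scenario probabilities are all hypothetical.
* `evaluate` is an illustrative name.

```python
# Hypothetical landed cost per server (USD) for three sourcing options.
BASE_COST = {"asia_offshore": 100_000, "mexico_nearshore": 106_000, "us_domestic": 112_000}

# Hypothetical tariff scenarios: probability and duty applied to each origin.
SCENARIOS = {
    "status_quo": {"p": 0.5, "duty": {"asia_offshore": 0.00, "mexico_nearshore": 0.00, "us_domestic": 0.0}},
    "exemption_removed": {"p": 0.3, "duty": {"asia_offshore": 0.20, "mexico_nearshore": 0.05, "us_domestic": 0.0}},
    "broad_escalation": {"p": 0.2, "duty": {"asia_offshore": 0.35, "mexico_nearshore": 0.15, "us_domestic": 0.0}},
}

def evaluate(base_cost: dict, scenarios: dict) -> dict:
    """Return expected and worst-case landed cost for each sourcing option."""
    results = {}
    for option, cost in base_cost.items():
        costs = [cost * (1 + s["duty"][option]) for s in scenarios.values()]
        expected = sum(s["p"] * c for s, c in zip(scenarios.values(), costs))
        results[option] = {"expected": expected, "worst_case": max(costs)}
    return results

for option, r in evaluate(BASE_COST, SCENARIOS).items():
    print(f"{option:18s} expected ${r['expected']:,.0f}  worst case ${r['worst_case']:,.0f}")
```

With these made-up inputs, each option wins on a different measure:

* The offshore option is cheapest under the status quo.
* The nearshore option has the lowest expected cost.
* Domestic assembly has the lowest worst case.

This is the kind of trade-off between offshore savings and closer-to-home assembly that the paragraph describes.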

Deep segmentation insights reveal how workloads, processors, cooling, and deployment choices shape competitive positioning in the evolving AI server ecosystem

The AI server landscape can best be understood through the lens of how demand is segmenting across server types, processor architectures, cooling technologies, form factors, applications, industries, and deployment modes. On the server type dimension, the ecosystem is differentiating among AI training servers, AI inference servers, and AI data servers. Training servers concentrate the most powerful accelerators and interconnects to handle large‑scale model development, while inference servers are optimized for efficiency and latency as models are deployed into production. AI data servers, by contrast, are tuned for high‑throughput storage, fast retrieval, and pre‑processing, feeding clean, structured data into training and inference pipelines. As generative AI workloads grow, organizations are increasingly operating these three server types in coordinated clusters rather than as standalone assets, aligning hardware profiles with the distinct stages of the AI lifecycle.

Processor type is another critical axis of differentiation. GPUs remain the primary workhorses for both training and high‑capacity inference, thanks to their mature software ecosystems and strong performance on parallel workloads. Application‑specific integrated circuits, including custom chips developed by major cloud providers, are gaining ground in scenarios where organizations can commit to a narrower set of models and workloads in exchange for greater performance per watt. Field‑programmable gate arrays retain a focused but important role in use cases that require ultra‑low latency, deterministic behavior, or on‑the‑fly reconfiguration, such as certain telecom and industrial control applications. The choice among GPUs, ASICs, and FPGAs is increasingly strategic, reflecting not only technical requirements but also supply availability, vendor lock‑in considerations, and the pace of model evolution.
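The GPU, ASIC, and FPGA trade-offs in this paragraph can be condensed into a rough rule of thumb. This is a minimal sketch with the following assumptions:

* The boolean criteria and their ordering are an illustrative simplification of the text, not a selection methodology from the report.
* Real decisions also weigh supply availability, lock-in, and the pace of model evolution, as the paragraph notes.

```python
from dataclasses import dataclass

@dataclass
class WorkloadProfile:
    needs_deterministic_low_latency: bool  # e.g. certain telecom or industrial control paths
    needs_reconfiguration: bool            # logic must change on the fly
    model_set_is_stable: bool              # organization can commit to a narrow set of models
    perf_per_watt_critical: bool           # energy efficiency outweighs flexibility

def suggest_processor(w: WorkloadProfile) -> str:
    """Illustrative rule of thumb mirroring the GPU / ASIC / FPGA trade-offs."""
    if w.needs_deterministic_low_latency or w.needs_reconfiguration:
        return "FPGA"
    if w.model_set_is_stable and w.perf_per_watt_critical:
        return "ASIC"
    # Default: mature software ecosystem and flexibility as models evolve
    return "GPU"

print(suggest_processor(WorkloadProfile(False, False, False, True)))  # GPU
print(suggest_processor(WorkloadProfile(False, False, True, True)))   # ASIC
print(suggest_processor(WorkloadProfile(True, False, False, False)))  # FPGA
```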

Cooling technology is emerging as a key differentiator in AI server selection. Traditional air cooling continues to serve lower‑density deployments, but as rack power levels climb, hybrid approaches that combine air cooling for general servers and liquid cooling for dense AI nodes are becoming more common. Full liquid cooling, including direct‑to‑chip and immersion designs, is transitioning from early adoption to broader use in facilities that host the most demanding AI training clusters, supported by a rapidly maturing ecosystem of components and integration expertise. These cooling choices intersect closely with form factor decisions. GPU servers designed around dense accelerator configurations, traditional rack servers, modular blade servers, and increasingly capable edge servers each offer different trade‑offs in density, serviceability, and deployment environments. High‑density racks in core data centers often favor liquid‑ready GPU and rack server designs, while branch offices, retail sites, and industrial facilities lean toward compact edge servers that can operate efficiently in constrained spaces.

Segmentation by application further sharpens these distinctions. Computer vision workloads, such as video analytics and industrial inspection, frequently demand high‑bandwidth data ingestion and real‑time inference close to where images are captured. Generative AI, spanning text, image, and multimodal models, places intense computational loads on training servers and then cascades into large fleets of inference systems supporting chat interfaces, content generation, and copilots. Classical machine learning continues to underpin recommendation engines, fraud detection, and optimization tasks, often running alongside deep learning workloads on shared infrastructure. Natural language processing has become ubiquitous across customer service, search, and knowledge management, driving demand for servers that can handle both batch processing and interactive, low‑latency queries.

End‑use industries are adopting these capabilities at different paces and with unique priorities. Information technology and telecom providers, including cloud platforms and carriers, remain the largest consumers of AI servers, building shared infrastructure for a wide variety of downstream customers. Banking, financial services, and insurance institutions focus heavily on risk management, fraud detection, and personalized services, often requiring a mix of on‑premises and cloud deployments for regulatory reasons. Healthcare and life sciences organizations prioritize data privacy, compliance, and high‑availability clinical decision support, while manufacturing and industrial users emphasize predictive maintenance, quality control, and robotics. Retail and ecommerce players deploy AI servers to refine recommendations, inventory management, and customer experiences, and government and defense entities use them for intelligence, cybersecurity, and mission planning under strict security regimes. Automotive and transportation stakeholders are investing in simulation, autonomous driving development, and fleet optimization, and education and research institutions leverage AI clusters for scientific computing and advanced analytics.

Finally, deployment mode segmentation highlights the tension and synergy between cloud‑based and on‑premises AI servers. Cloud‑based deployments provide rapid access to cutting‑edge accelerators and elastic capacity, making them attractive for experimentation, bursty training jobs, and organizations without the appetite for large capital investments. On‑premises deployments, however, retain strong appeal where data sovereignty, latency, predictable long‑term costs, or integration with existing operational technology are paramount. Industry commentary indicates that a substantial share of global IT spending still resides on‑premises even as cloud services grow quickly, reinforcing the importance of hybrid AI architectures that span both modes. Across these segmentation dimensions, the most successful strategies are those that align server type, processor choice, cooling strategy, form factor, application mix, industry requirements, and deployment model into a coherent, workload‑centric design.
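The cost side of the cloud versus on-premises tension is often framed as a break-even calculation. On one side sit upfront capital and running costs on-premises; on the other sits a recurring cloud bill. This is a minimal sketch with the following assumptions:

* All figures are hypothetical.
* The model ignores financing, depreciation schedules, hardware refresh cycles, and changes in utilization over time.
* `breakeven_months` is an illustrative name.

```python
import math

def breakeven_months(capex: float, onprem_monthly_opex: float, cloud_monthly_cost: float) -> float:
    """Months after which cumulative on-prem cost falls below cumulative cloud cost.

    Returns math.inf if running on-prem costs at least as much per month as the cloud.
    """
    monthly_saving = cloud_monthly_cost - onprem_monthly_opex
    if monthly_saving <= 0:
        return math.inf
    return capex / monthly_saving

# Hypothetical steady-state inference cluster
months = breakeven_months(capex=3_000_000, onprem_monthly_opex=60_000, cloud_monthly_cost=185_000)
print(f"Break-even after about {months:.0f} months")
```

Steady, predictable demand keeps the monthly cloud bill high, which shortens the break-even. Bursty or experimental workloads lower that bill and push break-even out. That dynamic is consistent with the paragraph's positioning of cloud for experimentation and on-premises for predictable long-term costs.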

This comprehensive research report categorizes the AI Server market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Server Type

- Processor Type

- Cooling Technology

- Form Factor

- Application

- End Use Industry

- Deployment Mode
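These seven dimensions, combined with the segment values named in the preceding discussion, map naturally onto a small typed taxonomy. Records tagged with it can then be cross-tabulated, for example industry against cooling technology. This is a minimal sketch with the following assumptions:

* The class names are hypothetical.
* The enum values paraphrase the prose above rather than the report's own data schema.
* The two tagged deployments are invented.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum

class ServerType(Enum):
    TRAINING = "AI training server"
    INFERENCE = "AI inference server"
    DATA = "AI data server"

class ProcessorType(Enum):
    GPU = "GPU"
    ASIC = "ASIC"
    FPGA = "FPGA"

class CoolingTechnology(Enum):
    AIR = "air cooling"
    HYBRID = "hybrid cooling"
    LIQUID = "liquid cooling"

class FormFactor(Enum):
    GPU_SERVER = "GPU server"
    RACK = "rack server"
    BLADE = "blade server"
    EDGE = "edge server"

class Application(Enum):
    COMPUTER_VISION = "computer vision"
    GENERATIVE_AI = "generative AI"
    MACHINE_LEARNING = "machine learning"
    NLP = "natural language processing"

class EndUseIndustry(Enum):
    IT_TELECOM = "IT & telecom"
    BFSI = "banking, financial services & insurance"
    HEALTHCARE = "healthcare & life sciences"
    MANUFACTURING = "manufacturing & industrial"
    RETAIL = "retail & ecommerce"
    GOVERNMENT = "government & defense"
    AUTOMOTIVE = "automotive & transportation"
    EDUCATION = "education & research"

class DeploymentMode(Enum):
    CLOUD = "cloud-based"
    ON_PREMISES = "on-premises"

@dataclass(frozen=True)
class SegmentTag:
    """One deployment tagged along all seven segmentation dimensions."""
    server_type: ServerType
    processor: ProcessorType
    cooling: CoolingTechnology
    form_factor: FormFactor
    application: Application
    industry: EndUseIndustry
    deployment: DeploymentMode

def cross_tab(tags, first: str, second: str) -> Counter:
    """Count co-occurrences of two dimensions, e.g. industry x cooling."""
    return Counter((getattr(t, first), getattr(t, second)) for t in tags)

# Two hypothetical tagged deployments
tags = [
    SegmentTag(ServerType.TRAINING, ProcessorType.GPU, CoolingTechnology.LIQUID,
               FormFactor.GPU_SERVER, Application.GENERATIVE_AI,
               EndUseIndustry.IT_TELECOM, DeploymentMode.CLOUD),
    SegmentTag(ServerType.INFERENCE, ProcessorType.GPU, CoolingTechnology.AIR,
               FormFactor.EDGE, Application.COMPUTER_VISION,
               EndUseIndustry.MANUFACTURING, DeploymentMode.ON_PREMISES),
]
print(cross_tab(tags, "industry", "cooling"))
```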

Regional dynamics across the Americas, Europe, Middle East, Africa, and Asia‑Pacific create distinct AI server investment patterns and partnership models

Regional dynamics add another layer of complexity to AI server planning, with the Americas, Europe, the Middle East and Africa, and Asia‑Pacific each exhibiting distinct patterns of investment, regulation, and ecosystem maturity. In the Americas, the United States anchors the market through hyperscale cloud providers, leading semiconductor companies, and a growing cohort of specialized GPU cloud and colocation providers. Contract manufacturers are expanding AI server production capacity in North America, both to serve domestic demand and to mitigate exposure to cross‑border trade frictions. Canada and Latin America are seeing increased data center construction in key hubs, driven by cloud region expansion, fintech growth, and government digitalization initiatives, though supply chain constraints and energy infrastructure still shape project timelines.

Across Europe, the Middle East, and Africa, AI server deployment is influenced strongly by regulatory and sustainability frameworks. European countries are moving ahead with stringent data protection and emerging AI governance rules, pushing organizations toward architectures that can demonstrate privacy, transparency, and responsible use. This has implications for where AI servers are located, how data is partitioned, and which cloud or colocation partners are selected. At the same time, European operators are among the most proactive in adopting energy‑efficient cooling and power practices, aligning AI server rollouts with aggressive decarbonization targets. In the Middle East, rapid investment in national AI strategies and smart city initiatives is creating demand for high‑performance training and inference clusters, sometimes intersecting with historical export‑control constraints on certain high‑end accelerators, which in turn encourages the development of regional supply options and collaborations. Parts of Africa are in earlier stages of build‑out, but new regional data centers and connectivity projects are laying the groundwork for broader AI adoption.

Asia‑Pacific combines deep manufacturing capacity with fast‑growing local consumption of AI servers. Economies such as Taiwan, South Korea, and parts of Southeast Asia play vital roles in producing components, assembling servers, and integrating advanced cooling solutions that are then shipped worldwide. At the same time, countries including Japan, India, Singapore, and Australia are scaling domestic AI infrastructure to support national digital agendas, cloud expansion, and industry‑specific initiatives. China represents a unique case, with strong internal demand, large‑scale data center construction, and extensive local technology ecosystems, yet continued exposure to United States export controls on certain advanced AI chips and platforms. Across the region, accelerating adoption of liquid cooling and high‑density rack designs underscores Asia‑Pacific’s central role in both innovating and manufacturing next‑generation AI servers. For global buyers, these regional nuances underscore the importance of tailored sourcing, partnership, and risk‑management strategies that align with the specific opportunities and constraints in each geography.

This comprehensive research report examines key regions that drive the evolution of the AI Server market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Strategic moves by leading chipmakers, server OEMs, cloud providers, and integrators accelerate innovation and ecosystem consolidation in AI servers

The AI server ecosystem is being shaped decisively by the strategies of leading chipmakers, server original equipment manufacturers, cloud providers, and integrators. On the silicon side, companies developing high‑performance GPUs and other accelerators continue to set the pace of innovation. Successive generations of data center GPUs have introduced higher compute density, faster memory, and improved interconnects, enabling ever larger and more complex AI models. At the same time, these vendors navigate an evolving regulatory environment, with licensing requirements affecting shipments of top‑tier accelerators and associated systems to certain countries and end users. Their responses, ranging from creating region‑specific product variants to investing in diversified manufacturing and packaging capacity, directly influence what AI servers are available, at what cost, and on what timelines.

Server manufacturers are translating these silicon advances into deployable platforms. Established OEMs have launched specialized AI server families that support dense configurations of state‑of‑the‑art accelerators, often in both air‑cooled and liquid‑cooled variants. Some of the newest systems are designed to host very large numbers of cutting‑edge GPUs in a single rack, targeting demanding training and inference environments and aligning with accelerator roadmaps that emphasize rack‑scale integration. Other vendors focus on more modular designs that allow enterprises to incrementally add AI capacity within existing data center footprints. Meanwhile, contract manufacturers and original design manufacturers, including major electronics assemblers in Asia, are scaling AI server production and building facilities closer to key end markets, particularly in North America, to support nearshoring and faster delivery.

Cloud providers and large service operators are both customers and competitors to traditional hardware vendors. Hyperscale platforms procure vast quantities of AI servers, but they also design custom systems that integrate proprietary accelerators, networks, and software stacks. These organizations are moving quickly to roll out general‑purpose AI infrastructure services as well as vertically tailored offerings for industries such as healthcare, financial services, and manufacturing. Their scale gives them significant influence over component roadmaps, interface standards, and open‑source software directions. Specialized GPU cloud providers and colocation operators are also emerging as important players, offering access to high‑end AI servers for organizations that prefer not to own and operate hardware directly.

A growing ecosystem of integrators, system builders, and cooling specialists rounds out the picture. These firms help enterprises specify, deploy, and optimize AI servers for their particular workloads, facilities, and regulatory contexts. Integrators are increasingly called upon to design hybrid environments that combine on‑premises clusters with public cloud resources, orchestrated through unified management and observability tools. Cooling and power specialists, in turn, collaborate with server vendors and data center operators to implement liquid cooling, power distribution, and monitoring solutions that can sustain high‑density AI deployments. Across this ecosystem, competitive advantage is shifting toward organizations that can pair technical innovation with robust supply chain management, regulatory fluency, and deep understanding of end‑user requirements.

This comprehensive research report delivers an in-depth overview of the principal market players in the AI Server market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- ADLINK Technology Inc.

- Advanced Micro Devices, Inc.

- Amazon Web Services, Inc.

- ASUSTeK Computer Inc.

- Baidu, Inc.

- Cerebras Systems Inc.

- Cisco Systems, Inc.

- Dataknox Solutions, Inc.

- Dell Technologies Inc.

- Fujitsu Limited

- GeoVision Inc.

- GIGA-BYTE Technology Co., Ltd.

- Google LLC by Alphabet Inc.

- Hewlett Packard Enterprise Company

- Huawei Technologies Co., Ltd.

- IEIT SYSTEMS

- Inspur Group

- Intel Corporation

- International Business Machines Corporation

- Lenovo Group Limited

- M247 Europe S.R.L.

- Microsoft Corporation

- MiTAC Computing Technology Corporation

- NVIDIA Corporation

- Oracle Corporation

- Quanta Computer Inc.

- SNS Network

- Super Micro Computer, Inc.

- Wistron Corporation

Actionable recommendations to de‑risk investments, modernize infrastructure, and capture emerging AI server opportunities across industries and geographies

In this environment of rapid innovation, regulatory complexity, and intense competition, industry leaders need a disciplined approach to AI server strategy. A first priority is to align infrastructure decisions tightly with business outcomes and workload requirements. Rather than pursuing AI servers as a generic capability, organizations should map specific use cases (such as conversational agents, predictive maintenance, fraud analytics, or imaging diagnostics) to performance, latency, data residency, and resilience needs. This mapping then informs choices among AI training servers, inference servers, and AI data servers, as well as among GPUs, ASICs, and FPGAs, ensuring that capital investments translate into measurable operational and customer impact.
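The use-case mapping recommended here can start as a simple table of requirements per use case. The server types to provision, and a rough placement, then follow from that table. This is a minimal sketch with the following assumptions:

* The four use cases come from the text, but their requirement values are hypothetical.
* The mapping rules are illustrative, and so are the 20 ms latency cutoff and the names `UseCase`, `required_server_types`, and `placement_hint`.

```python
from dataclasses import dataclass

@dataclass
class UseCase:
    name: str
    trains_own_models: bool      # develops or fine-tunes models in-house
    latency_budget_ms: float     # end-to-end response target in production
    data_must_stay_onsite: bool  # residency or sovereignty constraint
    heavy_data_prep: bool        # large ingestion / pre-processing pipeline

def required_server_types(uc: UseCase) -> list[str]:
    """Illustrative mapping from requirements to inference, training, and data servers."""
    types = ["inference"]  # every production use case serves predictions
    if uc.trains_own_models:
        types.append("training")
    if uc.heavy_data_prep:
        types.append("data")
    return types

def placement_hint(uc: UseCase) -> str:
    """Illustrative placement: residency or a tight latency budget favors on-premises/edge."""
    if uc.data_must_stay_onsite or uc.latency_budget_ms < 20:
        return "on-premises/edge"
    return "cloud or on-premises"

portfolio = [
    UseCase("conversational agent", trains_own_models=False, latency_budget_ms=500,
            data_must_stay_onsite=False, heavy_data_prep=False),
    UseCase("predictive maintenance", trains_own_models=True, latency_budget_ms=10,
            data_must_stay_onsite=True, heavy_data_prep=True),
    UseCase("fraud analytics", trains_own_models=True, latency_budget_ms=50,
            data_must_stay_onsite=True, heavy_data_prep=True),
    UseCase("imaging diagnostics", trains_own_models=True, latency_budget_ms=2000,
            data_must_stay_onsite=True, heavy_data_prep=False),
]
for uc in portfolio:
    print(f"{uc.name:24s} {required_server_types(uc)} -> {placement_hint(uc)}")
```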

A second recommendation is to treat power and cooling as strategic constraints rather than afterthoughts. As AI racks drive up energy density, investing early in hybrid or liquid‑ready cooling designs, upgraded power distribution, and detailed capacity planning can prevent costly retrofits and service disruptions later. Close collaboration among facilities teams, IT architects, and server vendors is essential to validate that prospective AI server configurations align with site‑level capabilities and sustainability targets. Where possible, leaders should also evaluate colocation and cloud options that can absorb peak training loads while allowing on‑premises environments to focus on steady‑state inference and mission‑critical applications.
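At its simplest, validating a prospective AI server configuration against site capabilities is a headroom check on power and cooling. This is a minimal sketch with the following assumptions:

* The per-server draw, overhead factor, and site limits are hypothetical placeholders. Real figures would come from vendor specifications and facility surveys.
* `RackConfig`, `Site`, and `validate` are illustrative names.

```python
from dataclasses import dataclass

@dataclass
class RackConfig:
    servers_per_rack: int
    server_power_kw: float  # hypothetical peak draw per server
    overhead_factor: float  # assumed allowance for switches, fans, PSU losses

    @property
    def rack_power_kw(self) -> float:
        return self.servers_per_rack * self.server_power_kw * self.overhead_factor

@dataclass
class Site:
    max_rack_power_kw: float  # power distribution limit per rack position
    max_air_cooled_kw: float  # heat the air system can remove per rack
    liquid_cooling_available: bool
    spare_facility_kw: float  # unallocated capacity at the site

def validate(config: RackConfig, racks: int, site: Site) -> list[str]:
    """Return a list of problems; an empty list means the plan fits."""
    issues = []
    per_rack = config.rack_power_kw
    if per_rack > site.max_rack_power_kw:
        issues.append(f"rack draws {per_rack:.1f} kW, limit is {site.max_rack_power_kw} kW")
    if per_rack > site.max_air_cooled_kw and not site.liquid_cooling_available:
        issues.append("rack exceeds air-cooling capacity and no liquid cooling is available")
    if per_rack * racks > site.spare_facility_kw:
        issues.append(f"{racks} racks need {per_rack * racks:.0f} kW, site has {site.spare_facility_kw} kW spare")
    return issues

plan = RackConfig(servers_per_rack=4, server_power_kw=10.0, overhead_factor=1.25)
site = Site(max_rack_power_kw=60.0, max_air_cooled_kw=20.0,
            liquid_cooling_available=False, spare_facility_kw=400.0)
for issue in validate(plan, racks=10, site=site):
    print("-", issue)
```

Here the proposed racks fit the power distribution limit. However, they exceed what air cooling can handle, and ten of them exceed the site's spare capacity. These are the kinds of gaps that, per the recommendation above, are cheaper to find before deployment than through retrofits.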

Supply chain resilience is a third pillar. Given the interplay of tariffs, export controls, and geopolitical factors, organizations should identify and qualify multiple sources for key AI server components and integration services. This may include leveraging vendors with diversified manufacturing footprints, exploring regional assembly for strategic systems, and building contractual flexibility to adapt to regulatory changes. Procurement teams should work closely with legal and risk functions to monitor policy developments and to incorporate scenario‑based clauses into major infrastructure agreements.

Finally, leaders should invest in the organizational capabilities needed to extract value from AI servers over time. This includes building cross‑functional teams that combine data science, software engineering, infrastructure operations, and security expertise; establishing governance for AI model lifecycle management; and implementing observability frameworks that track performance, utilization, and cost across hybrid environments. Continuous training and knowledge‑sharing programs help ensure that teams can keep pace with new server architectures, accelerators, and deployment patterns. By grounding AI server investments in clear objectives, robust engineering, resilient supply chains, and strong organizational capabilities, enterprises can position themselves to capture enduring benefits rather than short‑lived experimentation gains.
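One way to track utilization and cost across hybrid environments is to normalize each environment to a common unit, such as cost per busy accelerator-hour. This is a minimal sketch with the following assumptions:

* The environment names, hours, and costs are hypothetical.
* A real pipeline would pull these values from monitoring and billing systems.
* `EnvironmentUsage` is an illustrative name.

```python
from dataclasses import dataclass

@dataclass
class EnvironmentUsage:
    name: str
    accelerators: int
    hours: float       # length of the reporting window
    busy_hours: float  # accelerator-hours with work scheduled
    cost: float        # total cost for the window (USD)

    @property
    def utilization(self) -> float:
        return self.busy_hours / (self.accelerators * self.hours)

    @property
    def cost_per_busy_hour(self) -> float:
        return self.cost / self.busy_hours

# Hypothetical 30-day window (720 hours)
fleet = [
    EnvironmentUsage("on-prem-cluster", accelerators=64, hours=720, busy_hours=36_864, cost=92_160),
    EnvironmentUsage("cloud-burst", accelerators=32, hours=720, busy_hours=20_736, cost=82_944),
]
for env in fleet:
    print(f"{env.name:16s} utilization {env.utilization:.0%}  ${env.cost_per_busy_hour:.2f}/busy accel-hour")
```

Because the cost of owned hardware is largely fixed over the window, lower utilization raises its effective cost per busy hour. The same calculation therefore shows when capacity might be rebalanced between environments.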

Robust research methodology combining primary insights, secondary intelligence, and rigorous validation to deliver dependable AI server market analysis

Delivering reliable insight on a fast‑moving domain such as AI servers requires a research methodology that balances breadth of coverage with depth of technical and strategic understanding. The analytical approach behind this executive summary begins with secondary research drawn from a wide spectrum of publicly available sources, including technology vendor disclosures, standards bodies, policy announcements, data center operator publications, and independent technical analyses. This material provides visibility into product roadmaps, architectural trends, regulatory frameworks, and infrastructure practices across regions and industries.

To complement this foundation, primary insights from industry participants are incorporated wherever possible. These include perspectives from data center operators, cloud providers, hardware and silicon vendors, integrators, and enterprise users in sectors such as financial services, healthcare, manufacturing, and government. Structured interviews and expert consultations help validate interpretations of technical developments, clarify how organizations are prioritizing AI workloads, and highlight emerging pain points and success factors in real‑world deployments.

Analytically, the research framework organizes findings along the segmentation dimensions central to AI servers: server type, processor type, cooling technology, form factor, application, end‑use industry, deployment mode, and region. Cross‑segmentation views are used to explore how, for example, certain industries favor particular combinations of processors and cooling approaches, or how regional policy environments influence deployment choices. Scenario analysis is applied to assess the potential impact of changes in tariffs, export controls, and technology roadmaps on sourcing and deployment strategies, without asserting specific numerical projections or financial outcomes.

Throughout, emphasis is placed on internal consistency and traceability. Insights are cross‑checked against multiple sources and viewpoints, and qualitative judgments are clearly distinguished from factual observations where appropriate. This methodology is designed to provide decision‑makers with a robust, transparent basis for understanding the AI server landscape, while avoiding over‑reliance on any single data point or stakeholder perspective.

This section outlines the key chapters and topics of our comprehensive AI Server market research report for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- AI Server Market, by Server Type

- AI Server Market, by Processor Type

- AI Server Market, by Cooling Technology

- AI Server Market, by Form Factor

- AI Server Market, by Application

- AI Server Market, by End Use Industry

- AI Server Market, by Deployment Mode

- AI Server Market, by Region

- AI Server Market, by Group

- AI Server Market, by Country

- United States AI Server Market

- China AI Server Market

- Competitive Landscape

- List of Figures [Total: 19]

- List of Tables [Total: 1272]

AI servers emerge as the foundational infrastructure layer for the next decade of intelligent applications, demanding decisive and forward‑looking strategies

Taken together, the trends outlined in this executive summary point to a clear conclusion: AI servers are no longer a niche category within data centers, but the foundational infrastructure layer for a wide array of digital initiatives. As organizations embed AI into customer interactions, operational processes, and strategic decision‑making, the performance, efficiency, and resilience of AI servers increasingly define what is possible. The shift toward accelerator‑centric architectures, high‑density racks, advanced cooling, and hybrid deployment models reflects a structural reconfiguration of compute rather than a transient technology cycle.

At the same time, the context in which AI servers are deployed is growing more complex. Trade policies, tariffs, and export controls now have direct implications for system design and sourcing strategies. Regional regulations around data protection, AI governance, and sustainability influence where servers can be located and how they must be operated. Competition among chipmakers, server vendors, cloud platforms, and integrators is intensifying, bringing rapid innovation but also raising the bar for interoperability, supply assurance, and lifecycle support.

In this environment, success will favor organizations that combine technical excellence with strategic foresight and operational discipline. Aligning AI server investments with clearly defined use cases, robust power and cooling strategies, diversified supply chains, and strong organizational capabilities can transform AI from an experimental capability into a durable competitive advantage. The insights summarized here are intended to help leaders navigate that journey, providing a structured view of the forces reshaping AI infrastructure and the choices that matter most over the coming planning cycles.

Take the next step with tailored AI server intelligence by engaging Ketan Rohom to unlock deeper strategic and operational decision support

Securing a leadership position in the AI infrastructure race requires more than a high-level understanding of technology trends. It demands access to structured, comparable, and deeply contextualized intelligence on AI servers across server types, processor architectures, cooling technologies, deployment modes, and regions. That level of insight is difficult to assemble internally while teams are simultaneously executing large transformation programs and negotiating complex supplier and cloud agreements.

To move from broad awareness to confident action, consider engaging directly with Ketan Rohom, Associate Director, Sales & Marketing, to obtain the full market research report on the AI server landscape. The report consolidates primary and secondary insights into a single, decision-ready resource that helps you understand where demand is concentrating, how architectural choices are evolving, and which ecosystem partners are shaping high‑value opportunities. It translates a fast‑moving technical environment into a clear view of risks, trade‑offs, and implementation options.

By working with Ketan, leadership teams can quickly align on a fact‑based view of the AI server ecosystem and identify the chapters and analytical lenses that map most closely to their own priorities, whether that is navigating tariffs, evaluating liquid cooling investments, or balancing cloud-based and on‑premises deployments. This engagement is not just about acquiring a document; it is about equipping your organization with a structured framework for ongoing AI infrastructure decisions.

If your organization is preparing material capital commitments, renegotiating cloud or colocation contracts, or building differentiated AI services, now is the right moment to secure this intelligence. Coordinating with Ketan Rohom ensures you have direct support in selecting the most relevant deliverables and in turning the report’s insights into concrete, time‑bound next steps for your AI server strategy.
