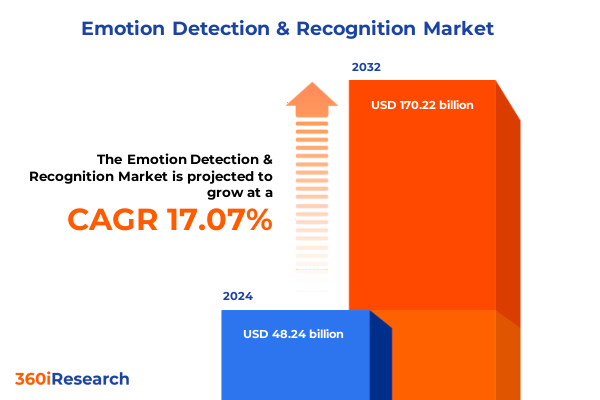

The Emotion Detection & Recognition Market size was estimated at USD 55.14 billion in 2025 and is expected to reach USD 63.03 billion in 2026, growing at a CAGR of 17.47% to reach USD 170.22 billion by 2032.
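As a quick sanity check on these headline figures, the compound-growth arithmetic can be sketched as follows. This is a minimal illustration rather than the report's own calculation; it assumes the 17.47% CAGR is measured from the 2025 base over the seven years to 2032, and the variable names are purely illustrative.

```python
# Sanity check of the headline figures quoted above (values in USD billions).
base_2025 = 55.14      # estimated 2025 market size
target_2032 = 170.22   # forecast 2032 market size
stated_cagr = 0.1747   # stated compound annual growth rate

# Assumption: the CAGR runs from the 2025 base, i.e. over 7 annual periods.
years = 2032 - 2025

# Forward check: compound the base value at the stated rate.
projected_2032 = base_2025 * (1 + stated_cagr) ** years

# Reverse check: the growth rate implied by the two endpoint values.
implied_cagr = (target_2032 / base_2025) ** (1 / years) - 1

print(f"Projected 2032 value: USD {projected_2032:.2f} billion")
print(f"Implied CAGR: {implied_cagr:.2%}")
```

Under that assumption, the projected value lands close to the stated 2032 figure and the implied rate rounds to the stated CAGR, so the three numbers are mutually consistent.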

Unveiling the foundational significance of emotion detection and recognition solutions that are redefining stakeholder engagement and decision intelligence

Emotion detection and recognition technologies are rapidly transforming how organizations interpret human cues and translate them into actionable insights. By analyzing facial microexpressions, vocal intonations, textual sentiment, and physiological signals, enterprises can now refine user experiences, strengthen customer relationships, and optimize operational processes. The convergence of artificial intelligence and advanced sensor hardware has democratized access to emotion analytics, enabling both established enterprises and agile newcomers to infuse emotional intelligence into product design, marketing campaigns, and safety systems.

As businesses grapple with evolving consumer expectations and intensifying competition, the ability to perceive and respond to underlying emotional states has emerged as a strategic differentiator. Organizations are leveraging these capabilities to refine personalization algorithms, elevate brand loyalty, and drive empathetic customer service. At the same time, regulatory frameworks and privacy considerations are maturing, creating a balanced environment that fosters innovation while safeguarding individual rights.

This executive summary provides a holistic overview of the emotion detection and recognition landscape in 2025. It synthesizes recent technological shifts, examines the impact of new trade policies, and unpacks critical segmentation and regional dynamics. By distilling key company strategies and offering actionable recommendations, this summary equips industry stakeholders with the insights needed to navigate complexity, align investments, and capture emerging opportunities.

Examining the paradigm shifts driving emotion AI advances from multimodal analytics to ethical design frameworks accelerating enterprise adoption

The past year has witnessed a surge in multimodal emotion analytics, where facial expression recognition, voice analysis, physiological monitoring, and text sentiment tools seamlessly interoperate. This shift toward unified, cross-modal platforms has enhanced accuracy and contextual richness, enabling applications ranging from automotive safety systems that detect driver fatigue to healthcare tools that monitor patient stress levels. Concurrently, breakthroughs in edge computing have allowed real-time inference directly on devices, reducing latency and bolstering data privacy.

In parallel, the industry has matured its approach to ethical design, embedding privacy-by-design principles, bias mitigation protocols, and transparent model explainability into product roadmaps. Governance frameworks and standards bodies have introduced guidelines that ensure emotion AI deployments adhere to ethical norms, fostering user trust and regulatory compliance. At the same time, innovations in synthetic data generation and privacy-preserving machine learning are mitigating data scarcity challenges and advancing model robustness.

These transformative shifts have collectively lowered barriers to adoption while elevating the strategic value of emotion detection in revenue generation, risk management, and human-centered innovation. As organizations integrate these emerging capabilities, they unlock new pathways to proactive decision-making, creating a competitive frontier marked by empathy-driven engagement and operational excellence.

Assessing how evolving United States tariff policies in 2025 are reshaping global supply chains for emotion detection hardware and software innovators

In 2025, a recalibration of United States tariff policies has introduced heightened duties on certain electronic components, sensor modules, and semiconductor imports widely used in emotion detection hardware. These measures, aimed at bolstering domestic technology manufacturing, have led to material cost increases for hardware providers that rely on offshore assembly and chip fabrication. As a result, many established vendors have reevaluated their procurement networks, shifting toward regional suppliers or pursuing joint ventures to secure tariff exemptions through localized production.

The ripple effects have been profound: hardware vendors are redesigning devices to leverage alternative sensor technologies that qualify for lower tariff brackets, while software-centric players are intensifying partnerships with cloud infrastructure providers to offset hardware premium pressures through service-based revenue models. In addition, increased duties have accelerated the emergence of domestic foundries and electronics assemblers, creating opportunities for nearshore supply chain resilience and reduced lead times.

Despite upward cost pressures, some organizations have harnessed this policy environment as an innovation catalyst, investing in modular hardware architectures and open standards to diversify supplier bases. Concurrently, service providers in the ecosystem are absorbing marginal cost impacts through subscription pricing flexibility, thereby preserving adoption momentum among large enterprises and small-to-medium business customers alike.

Mapping critical segmentation dimensions that drive strategic decision-making across hardware, technology modalities, deployment frameworks, and applications

An analysis based on components reveals that hardware modules, cloud-based and on-premises software suites, and professional services each play distinct yet complementary roles in delivering comprehensive emotion detection solutions. Hardware innovations, such as next-generation camera systems and wearable sensors, provide the foundational data capture capabilities. Software firms deliver analytics engines, while services organizations ensure successful integration, training, and support.

Within the technology segmentation, facial expression analysis continues to lead in consumer research and automotive safety, while physiological signal analysis has gained traction in healthcare monitoring. Text sentiment analysis excels in social media intelligence and customer feedback, and voice analysis empowers call center optimization and virtual assistant refinement. Deployments in hybrid cloud architectures are becoming an industry norm, balancing scalability and data sovereignty, whereas private and public cloud models address enterprise-specific security and cost requirements.

Examining application domains underscores growth in automotive driver monitoring systems, financial service risk assessment, government defense situational awareness, healthcare patient engagement tools, marketing campaign personalization, and retail shopper experience enhancement. Large enterprises often adopt fully integrated platforms with dedicated service agreements, while small and midsize organizations favor modular solutions that enable incremental investments and rapid deployment.

This comprehensive research report categorizes the Emotion Detection & Recognition market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Component

- Technology

- Deployment Mode

- Application

- Organization Size

Delving into the diverse regional dynamics that shape emotion detection adoption across the Americas, EMEA (Europe, Middle East & Africa), and Asia-Pacific hubs

In the Americas, North American organizations are pioneering large-scale pilot programs in retail analytics, automotive safety, and telehealth, leveraging advanced privacy regulations as a trust enabler. Supply chain proximity to major chip foundries in the United States and Mexico has further accelerated hardware innovation, while robust venture capital funding continues to fuel startup ecosystems in Silicon Valley and Toronto.

Within the Europe, Middle East and Africa region, stringent data protection laws have spurred solutions that prioritize on-premises and private cloud deployments. Public sector agencies across Western Europe have integrated emotion recognition into security and defense applications, adhering to rigorous ethical oversight. Simultaneously, Gulf Cooperation Council nations and select African markets are exploring pilot programs in healthcare and call center optimization, enabled by government-funded innovation grants.

In the Asia-Pacific region, high population density and diverse linguistic landscapes have driven demand for scalable cloud-based emotion analysis platforms. Key markets such as Japan and South Korea lead in consumer electronics integration, while China’s expanding research initiatives in multimodal affective computing propel domestic technology advances. Across Southeast Asia and Oceania, enterprises of all sizes are prioritizing user experience differentiation, spurring cross-border partnerships with global emotion AI vendors.

This comprehensive research report examines key regions that drive the evolution of the Emotion Detection & Recognition market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Highlighting the leading innovators and strategic collaborators at the forefront of emotion detection and recognition advancements across multiple disciplines

Major platform providers specializing in computer vision have fortified their portfolios by embedding emotion analysis modules into broader AI suites, while sensor manufacturers are co-developing custom camera and wearable arrays with leading research institutions. Startups focusing on synthetic dataset generation and bias reduction techniques have attracted significant strategic investment from software conglomerates, enabling platform vendors to enhance model transparency and fairness.

Consultancy and system integrator firms are establishing dedicated centers of excellence for emotion AI, offering turnkey solutions that include algorithm fine-tuning, pilot program management, and change management services. Meanwhile, cloud service providers are competing to provide specialized infrastructure stacks optimized for low-latency inference and secure data handling. Cross-industry alliances among automotive OEMs, healthcare groups, and telecom operators are also emerging, designed to accelerate real-world deployments and drive co-innovation roadmaps.

These collaborative dynamics underscore an ecosystem where hardware developers, algorithm specialists, service firms, and end-user organizations coalesce to deliver end-to-end emotion detection solutions. The result is a more resilient and interoperable market environment, where strategic partnerships and open innovation models reduce time to market and amplify technology impact.

This comprehensive research report delivers an in-depth overview of the principal market players in the Emotion Detection & Recognition market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- Affectiva, Inc.

- Amazon.com, Inc.

- Apple Inc.

- Beyond Verbal Communications Ltd.

- Google LLC

- iMotions ApS

- International Business Machines Corporation

- Kairos Face Recognition, Inc.

- Microsoft Corporation

- NEC Corporation

- Noldus Information Technology BV

- NVISO SA

- Q3 Technologies Inc.

- Realeyes, Inc.

- Sightcorp B.V.

- Smart Eye AB

- Tobii AB

- Uniphore Technologies Inc.

- Verint Systems Inc.

Offering practical guidance and targeted initiatives for industry leaders to capitalize on emotion insights while mitigating implementation complexities

Organizations should begin by defining clear use cases that align with strategic priorities, whether it be elevating customer experience, enhancing workforce well-being, or strengthening safety systems. By initiating small-scale proof of concept trials, stakeholders can validate technology performance, assess user acceptance, and refine data governance frameworks before committing to enterprise-wide rollouts.

To address ethical and compliance challenges, teams must establish cross-functional governance councils that include legal, privacy, and ethics experts alongside data scientists. This ensures that data collection and model training adhere to regulatory requirements and internal ethical standards. Additionally, investment in bias detection and model explainability tools will bolster transparency and foster stakeholder trust.

From a technological standpoint, balancing edge and cloud deployments can optimize performance while maintaining data sovereignty. Industry leaders should cultivate partnerships with specialists in cloud infrastructure, sensor hardware, and professional services to create integrated solution roadmaps. Finally, upskilling internal talent through targeted training programs and certifications will accelerate adoption, enabling organizations to embed emotional intelligence at the core of their decision-making processes.

Detailing the rigorous research approaches and analytical frameworks employed to ensure empirical integrity while revealing key emotion detection insights

This research uses a two-pronged methodology that combines extensive primary interviews with industry experts and decision-makers with in-depth secondary analysis of academic publications, patent filings, and open-source technology repositories. Over seventy structured interviews were conducted with stakeholders across hardware development, software engineering, regulatory bodies, and end-user organizations to capture qualitative perspectives on innovation drivers and adoption barriers.

Secondary research involved reviewing scholarly journals, conference proceedings, and regulatory whitepapers to map technological advancements and emerging best practices. Proprietary data triangulation techniques were applied to reconcile qualitative inputs with observable market activities, including partnership announcements, patent grants, and pilot program outcomes. Quantitative analysis of investment funding rounds and M&A transactions provided additional context on competitive dynamics.

Throughout the process, rigorous validation workshops were held with subject matter experts to test findings, challenge assumptions, and refine conclusions. This iterative feedback loop ensured that the final insights reflect real-world conditions, providing decision-makers with a reliable foundation for strategic planning and technology investments in the emotion detection domain.

This section provides a structured overview of our comprehensive Emotion Detection & Recognition market research report, outlining the key chapters and topics covered for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- Emotion Detection & Recognition Market, by Component

- Emotion Detection & Recognition Market, by Technology

- Emotion Detection & Recognition Market, by Deployment Mode

- Emotion Detection & Recognition Market, by Application

- Emotion Detection & Recognition Market, by Organization Size

- Emotion Detection & Recognition Market, by Region

- Emotion Detection & Recognition Market, by Group

- Emotion Detection & Recognition Market, by Country

- United States Emotion Detection & Recognition Market

- China Emotion Detection & Recognition Market

- Competitive Landscape

- List of Figures [Total: 17]

- List of Tables [Total: 1113]

Synthesizing the overarching themes and forward-looking perspectives that underscore the critical importance of emotion detection in shaping future strategies

The rapid evolution of emotion detection and recognition technologies has created a new frontier in human-centric innovation, where understanding and responding to emotional signals drives competitive differentiation. From multimodal analytics breakthroughs to privacy-preserving edge deployments, the industry is converging on interoperable solutions that balance accuracy, scalability, and ethical integrity.

As trade policies and regional regulations continue to influence supply chain configurations, technology providers and end users must remain agile, embracing modular architectures and flexible sourcing strategies. Strategic partnerships among hardware specialists, cloud service providers, and professional services firms will be essential in navigating cost pressures and accelerating time to deployment.

Looking ahead, the integration of emotion AI into standard enterprise toolchains, from CRM systems to safety monitoring platforms, will redefine stakeholder engagement and unlock new value streams. Organizations that proactively adopt governance frameworks, invest in talent development, and pilot innovative use cases will be best positioned to lead this transformation, setting new benchmarks for empathy-driven decision-making and sustainable growth.

Inspire action to engage with Ketan Rohom for unparalleled insights and access to the full emotion detection and recognition market research report

This comprehensive research report represents the definitive resource for organizations seeking to harness the power of emotion detection and recognition. By engaging with Ketan Rohom, Associate Director of Sales & Marketing, decision-makers can unlock tailored insights, gain privileged advisory support, and accelerate technology adoption journeys that maximize stakeholder engagement and operational resilience. Reach out to explore bespoke licensing options, engage in interactive executive briefings, and secure early access to updated findings that will drive competitive advantage in an increasingly emotion-intelligent world.
