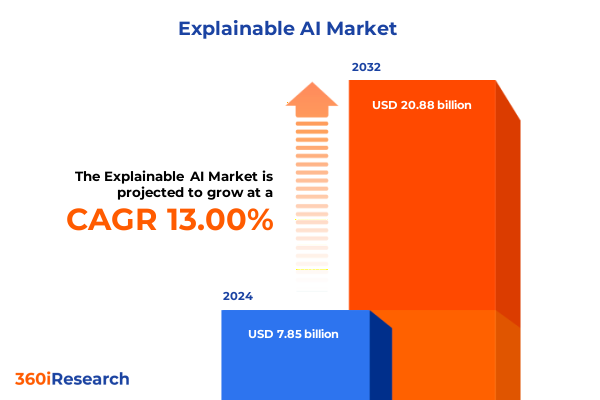

The Explainable AI Market size was estimated at USD 8.83 billion in 2025 and is expected to reach USD 9.93 billion in 2026, growing at a CAGR of 13.08% to reach USD 20.88 billion by 2032.
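The headline figures can be sanity-checked with the standard compound annual growth rate formula. The sketch below assumes the quoted rate is measured from the 2025 base value over the seven years to 2032, which is the reading most consistent with the stated numbers; the function name `cagr` is illustrative and not taken from the report.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over a number of years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above, in USD billions.
base_2025 = 8.83
forecast_2032 = 20.88

# Assumption: the rate spans 2025 to 2032, i.e. seven compounding periods.
rate = cagr(base_2025, forecast_2032, years=2032 - 2025)
print(f"Implied CAGR 2025-2032: {rate:.2%}")
```

Under that assumption the implied rate comes out at roughly 13.1%, in line with the quoted CAGR. The one-year step from 2025 to 2026 implies a slightly lower growth rate, so the quoted figure is best read as an average over the full forecast period.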

Unveiling the Imperative of Transparency in Artificial Intelligence to Empower Decision-Makers with Actionable Clarity and Foster Ethical AI Adoption

The rapid proliferation of artificial intelligence across industries has brought unprecedented capabilities in automation, prediction, and personalization, but it has also underscored the urgent necessity for transparency and interpretability. Explainable AI emerges as the cornerstone for bridging the gap between complex algorithmic processes and human understanding. By providing clear, traceable pathways for how models arrive at decisions, explainable AI not only cultivates stakeholder confidence but also addresses ethical, legal, and operational concerns that accompany high-stakes deployments. Consequently, organizations are pressured to adopt rigorous transparency measures to meet evolving compliance requirements and to maintain competitive differentiation.

In parallel, international regulatory bodies are codifying specific transparency obligations into law. The European Union’s Artificial Intelligence Act, which entered into force on August 1, 2024, exemplifies this trend: it categorizes AI applications into distinct risk tiers, introduces transparency mandates spanning minimal-risk to high-risk systems, and imposes significant fines for non-compliance. In North America, while federal oversight remains nascent, state-level legislation and voluntary frameworks signal a trajectory toward fortified governance. This executive summary distills the foundations of explainable AI’s ascent, outlines key market shifts, and illuminates strategic pathways for organizations to harness transparent AI models as a sustainable source of trust and innovation.

Charting the Paradigm-Shifting Innovations That Are Reshaping Explainable AI Infrastructure and Governance Models and Empowering Stakeholder Confidence in Automated Systems

Over the past year, advances in model interpretability have moved beyond post-hoc analysis and have been embedded directly into AI architectures. The emergence of Constrained Concept Refinement methodologies, developed by leading academic institutions, has facilitated real-time interpretability by integrating transparent concept embeddings that maintain accuracy while dramatically reducing run times. Likewise, hybrid neuro-symbolic frameworks combine the pattern-recognition prowess of deep learning with the logical rigor of symbolic reasoning, offering deterministic explanations in sectors where validation and auditability are paramount, such as healthcare and finance.

Simultaneously, user-centric capabilities have matured through interactive explanation systems that enable stakeholders to pose “what-if” scenarios and receive instantaneous feedback on decision pathways. Major cloud providers have piloted these conversational explanation interfaces within their AI platforms, introducing a new dimension of human-in-the-loop validation. Complementing these user-facing tools, market-wide efforts to standardize benchmarking datasets and establish explainability metrics are gaining momentum, forming consistent evaluation frameworks that drive interoperability and foster cross-organizational collaboration.

Evaluating the Compounding Effects of 2025 U.S. Tariff Policies on Global Explainable AI Supply Chains and Cost Structures

In April 2025, sweeping reciprocal tariffs announced by the U.S. administration introduced duties on a broad array of imported technology components, directly impacting the cost base for AI hardware and infrastructure. Although raw semiconductor chips initially received temporary exemptions, subsequent signals from policymakers have implied pending duties on even raw wafers, while assembled modules containing GPUs remain fully subject to tariffs. Consequently, the incremental cost of GPUs and server systems integral to data centers rose sharply, affecting both large cloud operators and emerging AI startups.

Analysts estimate that these tariffs, which include duties of up to 145% on certain goods from key trading partners, have elevated capital expenditure forecasts for data center expansions and prompted leading technology firms to reassess project timelines. While import duties aim to bolster domestic manufacturing, the immediate outcome has been supply chain realignments and sourcing diversification as companies pivot toward tariff-exempt jurisdictions. In the near term, this policy shift increases the total cost of ownership for AI deployments and may restrain innovation velocity, particularly for smaller organizations with limited procurement flexibility.

Deriving Deep Insights from Multidimensional Explainable AI Market Segmentation to Guide Tailored Solutions Across End-Use Scenarios

Dissecting the explainable AI landscape by component reveals a bifurcated offering: Services, which span consulting, support and maintenance, and system integration, and Software, encompassing comprehensive AI platforms alongside frameworks and tools. The divergence in delivery models underscores distinct value propositions, as service-led engagements rely heavily on domain expertise and customization, whereas software-based solutions emphasize scalability and productized interpretability capabilities.

From a methodological perspective, solutions range from data-driven approaches that extract feature attributions and perform sensitivity analyses to knowledge-driven systems that leverage ontologies and rule-based inference for logical traceability. These methodologies intersect with technological domains such as computer vision, deep learning, machine learning, and natural language processing, enabling specialized interpretability techniques tailored to each paradigm. Within software types, the dichotomy between integrated suites and standalone modules influences deployment flexibility, with cloud-based implementations offering rapid provisioning and seamless updates, juxtaposed against on-premise options that deliver stricter data residency and control.
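To make the data-driven category concrete, here is a minimal sketch of one-at-a-time sensitivity analysis, a simple form of feature attribution that measures how much a model's output moves when a single input is nudged. The scoring model, feature names, and weights are hypothetical illustrations, not drawn from the report or from any vendor's product.

```python
from typing import Callable, Dict

Features = Dict[str, float]


def sensitivity(model: Callable[[Features], float],
                inputs: Features,
                delta: float = 0.01) -> Dict[str, float]:
    """Finite-difference change in model output per unit change in each feature."""
    baseline = model(inputs)
    scores = {}
    for name, value in inputs.items():
        perturbed = dict(inputs)          # copy so other features stay fixed
        perturbed[name] = value + delta   # nudge one feature at a time
        scores[name] = (model(perturbed) - baseline) / delta
    return scores


# Hypothetical toy scoring model used only for illustration.
def toy_risk_score(x: Features) -> float:
    return 0.5 * x["debt_ratio"] - 0.25 * x["years_employed"] + 2.0


applicant = {"debt_ratio": 0.4, "years_employed": 6.0}
scores = sensitivity(toy_risk_score, applicant)

# Rank features by the magnitude of their influence on the score.
for name, score in sorted(scores.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {score:+.3f}")
```

For a linear model like this one, the sensitivities simply recover the model's weights. For non-linear models they describe only local behavior around the chosen input, which is one reason practitioners often pair such estimates with other attribution methods.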

Examining applications further, explainable AI finds critical use in cybersecurity, decision support systems, diagnostic platforms, and predictive analytics, each demanding unique interpretability requirements. This spectrum extends across end-use segments including aerospace and defense, banking, financial services and insurance, energy and utilities, healthcare, IT and telecommunications, media and entertainment, public sector and government, and retail and eCommerce, reflecting the pervasive need for transparency across high-stakes decision environments.

This comprehensive research report categorizes the Explainable AI market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Component

- Methods

- Technology Type

- Software Type

- Deployment Mode

- Application

- End-Use

Uncovering Regional Dynamics in Explainable AI Adoption Trends and Investment Patterns Across Americas, EMEA, and Asia-Pacific Markets

Regional dynamics in explainable AI deployment reveal nuanced strategic priorities driven by regulatory, economic, and innovation imperatives. In the Americas, North American organizations lead global adoption through substantial private and public investments in AI initiatives, benefiting from a mature venture ecosystem and robust research networks. Canada’s federal AI strategy emphasizes ethical frameworks paired with research funding, while the United States pursues a largely market-driven approach that balances voluntary standards with targeted legislative proposals at the state level.

Europe, Middle East and Africa confronts a tightly orchestrated regulatory environment anchored by the EU’s AI Act, which imposes mandatory transparency and risk management obligations for high-risk systems and introduces enforceable codes of practice. Within EMEA, localized adaptations and sectoral guidelines inform regional compliance roadmaps, compelling providers to incorporate explainability by design. Meanwhile, the Asia-Pacific region demonstrates accelerated adoption, driven by national frameworks such as South Korea’s upcoming AI Basic Act and voluntary governance models in Singapore and Japan. Governments across ASEAN are also introducing pilot programs and workforce development initiatives to embed AI literacy in their markets.

This comprehensive research report examines key regions that drive the evolution of the Explainable AI market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Highlighting Strategic Movements and Innovations from Leading Organizations Shaping the Explainable AI Competitive Landscape in 2025

Leading organizations are distinguishing themselves through strategic integrations of explainability into core AI offerings and through targeted investments in interpretability research. Prominent technology firms have embedded explainability toolkits within their cloud AI platforms, providing native support for feature attribution, counterfactual analysis, and bias detection. Concurrently, enterprise-focused vendors have expanded their portfolios with governance modules that offer automated compliance audits and continuous model monitoring.

Startups and research spin-offs are also reshaping competitive dynamics by specializing in breakthrough interpretability techniques. Constrained Concept Refinement and mechanistic interpretability platforms have demonstrated significant runtime reductions and improved transparency for high-stakes applications, capturing enterprise interest. Funding patterns have underscored this momentum: pure-play XAI companies have raised substantial capital, ranging from multimillion-dollar Series A rounds to billion-dollar strategic investments, reflecting investor confidence in the commercial potential of transparent AI solutions.

This comprehensive research report delivers an in-depth overview of the principal market players in the Explainable AI market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- Abzu ApS

- Alteryx, Inc.

- ArthurAI, Inc.

- C3.ai, Inc.

- DataRobot, Inc.

- Equifax Inc.

- Fair Isaac Corporation

- Fiddler Labs, Inc.

- Fujitsu Limited

- Google LLC by Alphabet Inc.

- H2O.ai, Inc.

- Intel Corporation

- Intellico.ai s.r.l

- International Business Machines Corporation

- ISSQUARED Inc.

- Microsoft Corporation

- Mphasis Limited

- NVIDIA Corporation

- Oracle Corporation

- Salesforce, Inc.

- SAS Institute Inc.

- Squirro Group

- Telefonaktiebolaget LM Ericsson

- Temenos Headquarters SA

- Tensor AI Solutions GmbH

- Tredence Inc.

- ZestFinance Inc.

Empowering Industry Leaders with Pragmatic, High-Impact Strategies to Accelerate Adoption and Governance of Explainable AI Solutions

Leaders aiming to capitalize on the explainable AI revolution must adopt a proactive governance framework that integrates transparency as a foundational design principle rather than as a retrospective patch. Building multidisciplinary teams that combine data scientists, ethicists, and domain experts ensures interpretability requirements are woven into model development lifecycles. Additionally, piloting hybrid approaches that unite data-driven and knowledge-driven methods can deliver robust, user-verifiable explanations that satisfy both technical and regulatory stakeholders.

Investing in interactive explanation interfaces and embedding them within operational workflows enhances stakeholder engagement and accelerates trust-building. Organizations should collaborate with standard-setting consortia and contribute to emerging benchmarking initiatives to align internal metrics with industry best practices. Finally, diversifying hardware sourcing strategies and exploring cloud-based models can mitigate cost volatility stemming from trade policies and tariff fluctuations, ensuring resilient and scalable deployments.

Delineating Rigorous and Transparent Research Methodologies Underpinning the Explainable AI Market Analysis to Ensure Reproducibility and Credibility

The research underpinning this analysis synthesizes extensive secondary research, including regulatory filings, industry publications, and academic journals, alongside in-depth primary insights gathered from expert interviews with AI practitioners, policymakers, and end-user organizations. To validate key findings, a structured survey was conducted among C-suite and technical leaders across multiple sectors, focusing on their adoption drivers, interpretability challenges, and regulatory preparedness.

Quantitative data were cross-verified against publicly available financial disclosures and patent databases, while qualitative perspectives were coded thematically to extract actionable hypotheses. Triangulation between secondary and primary inputs ensured both breadth and depth, enabling a robust and reproducible methodology. Transparency in approach was maintained by documenting data sources, interview protocols, and analytical frameworks, ensuring the credibility and replicability of conclusions presented herein.

This section provides a structured overview of our comprehensive Explainable AI market research report, outlining the key chapters and topics covered for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- Explainable AI Market, by Component

- Explainable AI Market, by Methods

- Explainable AI Market, by Technology Type

- Explainable AI Market, by Software Type

- Explainable AI Market, by Deployment Mode

- Explainable AI Market, by Application

- Explainable AI Market, by End-Use

- Explainable AI Market, by Region

- Explainable AI Market, by Group

- Explainable AI Market, by Country

- United States Explainable AI Market

- China Explainable AI Market

- Competitive Landscape

- List of Figures [Total: 19]

- List of Tables [Total: 1590]

Consolidating Key Findings to Illuminate the Future Trajectory and Strategic Imperatives in the Evolving Explainable AI Ecosystem

This executive summary consolidates the essential insights required to navigate the evolving explainable AI ecosystem. By illuminating the key technological advancements, regulatory imperatives, tariff impacts, and competitive maneuvers, stakeholders gain a comprehensive understanding of the forces shaping transparency in AI. The integration of interpretability into model design, the emergence of user-centric explanation systems, and the diversification of methodologies underscore a collective shift toward accountable AI.

Looking forward, the strategic imperative is clear: organizations that systematically embed explainability into their AI initiatives will not only satisfy compliance mandates but will also secure enduring trust with customers, regulators, and partners. As market dynamics accelerate, the confluence of technological innovation, governance frameworks, and cost management strategies will define leadership in the transparent intelligence era.

Connect with Ketan Rohom to Unlock Comprehensive Explainable AI Research Insights and Empower Your Organization with Actionable Market Intelligence Today

Engage directly with Ketan Rohom to gain tailored insights that align with your strategic objectives and operational priorities in explainable AI. His seasoned perspective and in-depth understanding of market dynamics will help you navigate complex regulatory environments, optimize your technology investments, and identify the most impactful practical applications for your organization. By partnering with Ketan, you can secure priority access to the comprehensive report on explainable AI, complete with actionable analysis of segmentation, regional trends, competitor activities, and tariff implications. Reach out now to transform this critical intelligence into strategic advantage, and empower your teams to make evidence-based decisions that drive innovation and safeguard trust in AI solutions.
