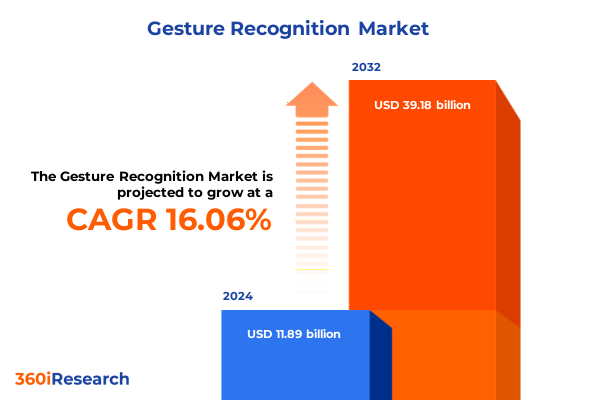

The Gesture Recognition Market size was estimated at USD 13.79 billion in 2025 and is expected to reach USD 16.00 billion in 2026, growing at a CAGR of 16.07% to reach USD 39.18 billion by 2032.
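As a quick sanity check on these figures, the compound annual growth rate implied by two endpoint values can be recomputed directly. The sketch below is illustrative only: the function name `implied_cagr` is hypothetical, and it assumes the quoted rate applies over the six years from 2026 to 2032, which the summary does not state explicitly.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate linking two values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the summary (USD billions).
size_2026 = 16.00
size_2032 = 39.18

# Assumption: the CAGR is measured over 2026-2032 (six compounding periods).
rate = implied_cagr(size_2026, size_2032, years=6)
print(f"Implied CAGR 2026-2032: {rate:.2%}")
```

Under that assumption the result lands close to the quoted 16.07%; small differences are to be expected from rounding in the published figures.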

Understanding the Current Momentum of Gesture Recognition Technology and Its Role in Shaping Human-Machine Interaction Across Diverse Industry Verticals

Gesture recognition has emerged as a pivotal facet of the evolving human-machine interface landscape, bridging the physical and digital realms. Over the past decade, innovations have advanced the ability to interpret natural user movements as input signals, fostering more intuitive interactions with devices ranging from smartphones and wearables to automotive systems and industrial machinery. As sensor technologies mature, applications have broadened beyond niche use cases into mainstream deployment, reflecting a growing appetite for seamless, touchless control mechanisms.

The COVID-19 pandemic accelerated the demand for contactless interfaces, spurring investment in gesture-driven control mechanisms across enterprise and consumer domains. Heightened awareness around hygiene, coupled with the shift to remote work and telemedicine, underscored the need for touchless interaction models that preserve safety without compromising functionality. Simultaneously, the proliferation of augmented and virtual reality platforms has elevated consumer expectations for intuitive, immersive controls, placing gesture recognition at the center of next-generation gaming, training simulations, and collaborative virtual environments.

In this dynamic context, understanding the convergence of sensor modalities, artificial intelligence, and system architectures is indispensable for stakeholders aiming to harness gesture recognition’s full potential. Recent breakthroughs in computer vision algorithms, coupled with progress in active infrared, radar, and ultrasonic sensing, have significantly improved accuracy, latency, and environmental robustness. At the same time, software platform innovations have enabled scalable deployment across cloud and on-premise environments, accelerating adoption in consumer electronics, healthcare, and enterprise settings.

This executive summary distills critical developments, structural shifts, and strategic insights shaping the trajectory of gesture recognition technology. Subsequent sections explore transformative industry trends, the ramifications of United States tariffs enacted in 2025, key segmentation perspectives, regional variations in adoption, profiles of leading innovators, actionable recommendations for industry leaders, and the methodological underpinnings of our research. By synthesizing these dimensions, decision makers and technical architects will gain a comprehensive understanding of the current drivers, challenges, and future opportunities shaping this market segment.

Exploring Transformational Shifts Fueled by Innovative Sensor Technologies, Advanced AI Algorithms, and New Application Frontiers Elevating User Engagement

Recent years have witnessed a profound evolution in the underlying mechanisms that enable machines to comprehend human gestures, catalyzing transformative shifts in accuracy, responsiveness, and application scope. At the heart of this metamorphosis lies a synergy between sensor technology advancements and the exponential growth of AI processing capabilities. Active infrared arrays have become more compact and power-efficient, enabling precise depth mapping in space-constrained devices, while capacitive touchless sensors leverage minute electric field variations to detect proximity with minimal power draw. In parallel, computer vision frameworks have advanced through convolutional neural networks and transformer architectures, delivering robust recognition even in complex lighting and occlusion scenarios.

Moreover, radar-based sensing has emerged as a game-changing modality, offering exceptional resilience to environmental noise and enabling gesture detection through non-line-of-sight interactions. Ultrasonic sensors, long a niche component in industrial proximity systems, have been miniaturized for integration into consumer electronics, providing economical spatial awareness in cost-sensitive applications. Collectively, these sensor innovations, fused by advanced AI algorithms, have expanded the frontier of possible applications, from cabin control systems in next-generation vehicles to touchless authentication on personal devices.

In addition, the co-design of sensors and software platforms has facilitated the emergence of low-code integration frameworks, empowering developers to embed gesture recognition capabilities with minimal specialized expertise. Industry consortia are defining open standards to promote interoperability, with initiatives focused on unified data formats and cross-platform APIs supporting AR/VR and IoT ecosystems. Edge AI accelerators, such as dedicated neural processing units in mobile and embedded devices, are enabling high-throughput inference, further reducing the barrier to widespread adoption.

Transitioning from technological enablers to ecosystem dynamics, the proliferation of hybrid sensing platforms and standardized software interfaces is fostering cross-sector collaboration. Automotive OEMs, healthcare providers, and entertainment companies are jointly exploring gesture-driven solutions that enhance safety, hygiene, and immersive experiences. As integration frameworks mature, the emphasis is shifting toward optimizing latency, energy efficiency, and privacy, ensuring that gesture recognition becomes a seamless, secure, and indispensable component of tomorrow’s digital interactions.

Analyzing the Impact of United States Tariffs Enacted in 2025 on Gesture Recognition Component Costs, Supply Chains, and Global Competitive Dynamics

In 2025, the United States introduced a new tranche of tariffs targeting select electronic components and consumer devices, with significant implications for gesture recognition supply chains and cost structures. These measures, aimed primarily at imports from key manufacturing hubs, have elevated duties on sensors, imaging modules, and system components essential to gesture interface solutions. As component costs have risen, original equipment manufacturers and system integrators have faced increased pressure on margins, prompting many to reassess supplier portfolios and procurement strategies.

The cumulative effect of these tariffs has been a shift in supply chain strategies toward diversification and nearshoring. Companies are increasingly relocating critical manufacturing and assembly operations to regions with favorable trade agreements or domestic facilities to mitigate duty burdens. While this transition entails upfront capital investments and operational reconfiguration, it also presents opportunities to enhance supply resiliency, reduce lead times, and bolster compliance with emerging data sovereignty regulations. However, smaller entrants and startups with limited sourcing flexibility may encounter barriers to entry, which could consolidate market power among established players with greater financial and logistical resources.

Government incentives such as the CHIPS and Science Act and infrastructure funding programs are providing grants and tax credits to support domestic semiconductor and sensor manufacturing, mitigating some cost increases from tariffs and encouraging innovation within the United States. These policy levers, combined with collaborative R&D incentives, are catalyzing investments in localized production facilities and advanced packaging techniques. Although end users may bear a portion of the increased costs through end-product pricing, the push toward localized production and innovative supply chain financing is likely to yield long-term efficiencies and foster greater vertical integration across the gesture recognition ecosystem.

Looking ahead, the tariff-driven cost recalibrations are expected to influence pricing strategies and partnership models. Strategic alliances between component vendors and system developers are on the rise, enabling collaborative cost-sharing mechanisms and joint investment in optimized manufacturing processes.

Unveiling Critical Insights from Technology, Product, Deployment Mode, Application, and End User Segmentation to Decode Gesture Recognition Adoption Patterns

An in-depth examination of gesture recognition deployment reveals nuanced adoption patterns when evaluated through multiple segmentation lenses. Based on technology, the landscape is defined by distinct sensor modalities: active infrared excels in dense depth mapping within constrained spaces; capacitive systems offer low-power proximity detection; computer vision frameworks provide high-fidelity gesture analysis under diverse lighting; radar sensors enable robust non-line-of-sight recognition; and ultrasonic solutions deliver economical spatial awareness in cost-sensitive applications. Each modality brings unique performance characteristics that inform design trade-offs and implementation strategies.

Turning to product segmentation, core components such as specialized sensors, comprehensive software platforms, and integrated wearables and devices form the backbone of gesture ecosystems. Sensor manufacturers are investing heavily in miniaturization and sensitivity enhancements, while platform vendors are focusing on modular APIs, cross-platform compatibility, and ease of integration. Wearables and devices, ranging from gesture-enabled earbuds to smart gloves, are driving user-centric experiences that blend hardware precision with software intelligence.

Deployment mode further delineates the market into cloud-based frameworks and on-premise installations. Cloud architectures offer scalability, continuous model refinement, and streamlined updates, yet must address latency and data privacy concerns; conversely, on-premise solutions deliver predictable performance and enhanced control but may require dedicated infrastructure and specialized maintenance.

When considering application segmentation, automotive systems leverage gesture control for cabin adjustments, infotainment and telematics management, and advanced safety and ADAS functions; consumer electronics encompass AR/VR headsets, smart TVs and monitors, smartphones and tablets, and wearable devices; gaming and entertainment experiences are redefined by immersive, touchless interfaces; healthcare implementations support sterile operation and patient monitoring; and industrial contexts apply gesture inputs for machinery control and safety compliance.

Finally, end user segmentation distinguishes enterprise deployments, where integration with existing IT landscapes and cross-functional workflows is paramount, from individual consumer adoption, which prioritizes ease of use, aesthetics, and seamless interoperability with personal devices. These segmentation insights collectively illuminate the diverse requirements and value propositions driving gesture recognition innovation.

This comprehensive research report categorizes the Gesture Recognition market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Technology

- Product

- Deployment Mode

- Application

- End User

Dissecting Regional Dynamics Across the Americas, Europe Middle East and Africa, and Asia-Pacific to Illuminate Strategic Opportunities in Gesture Recognition

Geographic factors play a critical role in shaping gesture recognition trajectories, with distinct dynamics emerging across the Americas; Europe, Middle East & Africa; and Asia-Pacific. In the Americas, a mature technology ecosystem and strong venture capital presence are catalyzing rapid adoption in consumer electronics and automotive sectors. The region’s robust digital infrastructure and advanced regulatory frameworks have facilitated pilot programs in touchless vehicle control and immersive entertainment, setting precedents for global best practices.

Moving to Europe, Middle East & Africa, a regulatory emphasis on data privacy and stringent compliance standards has driven demand for on-premise solutions in healthcare and industrial automation. Several European consortia are collaborating on unified gesture interface protocols to ensure interoperability and safety, particularly within automotive assembly lines and medical devices. Meanwhile, emerging economies in the Middle East and Africa are prioritizing gesture-enabled user experiences in public services and smart city applications, leveraging partnerships between local governments and international technology providers.

In the Asia-Pacific region, a combination of expansive manufacturing capabilities and proactive government initiatives has positioned several countries at the forefront of next-generation gesture recognition R&D. Strategic investments in semiconductor fabrication and AI research have accelerated the deployment of advanced sensor arrays in consumer electronics hubs and smart factories. High population density and digital adoption rates have also spurred innovative use cases in retail, education, and mobile gaming, making Asia-Pacific a hotbed for scalable demonstrations and rapid commercialization. Region-specific regulatory incentives, such as subsidies for Industry 4.0 implementations, further underscore the strategic value of this market as a bellwether for global gesture recognition advancements.

This comprehensive research report examines key regions that drive the evolution of the Gesture Recognition market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Profiling Leading Innovators, Strategic Partnerships, and Technological Pioneers Shaping the Future Trajectory of Gesture Recognition Via Collaborative Ecosystems

Leading technology companies and agile startups are fiercely competing to define the future of gesture recognition, each leveraging distinct strengths in sensor development, algorithmic innovation, and ecosystem partnerships. Established semiconductor and processor manufacturers have expanded their sensor portfolios to include high-resolution depth cameras and radar modules tailored for gesture capture. Chip vendors are integrating dedicated signal processing units and AI accelerators to optimize real-time inference on edge devices.

For instance, Ultraleap’s hand-tracking modules have achieved sub-millimeter precision, while Qualcomm’s Snapdragon platforms incorporate gesture recognition frameworks optimized for mobile devices. Google’s Soli radar sensor has paved the way for micro-gesture interaction on wearable form factors, and leading vision sensor providers have launched AI-powered camera modules for automotive and industrial applications.

Software platform specialists are forging alliances with cloud service providers and original equipment manufacturers to deliver modular gesture recognition engines, complete with SDKs, developer tools, and pre-trained models. Collaborative ecosystems spanning hyperscale clouds, application developers, and device integrators ensure that gesture capabilities can be seamlessly embedded in a wide array of hardware form factors.

Prominent startups are advancing niche solutions, such as ultra-low-power capacitive sensing arrays for wearable health monitors and compact ultrasonic transceivers for smart home controllers. Mergers and acquisitions have also shaped the landscape, with larger entities incorporating specialized technology firms to bolster end-to-end gesture offerings. Strategic joint ventures between automotive OEMs and sensor innovators have yielded bespoke in-cabin control systems, while partnerships between consumer electronics brands and AI research labs have accelerated gesture-driven user interface enhancements. This vibrant tapestry of competition and cooperation is fueling a rapidly evolving value chain, characterized by continuous iteration, cross-domain synergies, and a relentless drive toward more natural, intuitive human-machine dialogues.

This comprehensive research report delivers an in-depth overview of the principal market players in the Gesture Recognition market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- Apple Inc.

- Cipia Vision Ltd.

- Cognitec Systems GmbH

- Elliptic Laboratories

- ESPROS Photonics Corporation

- Fibaro Group SA

- Google LLC by Alphabet Inc.

- HID Global Corporation by ASSA ABLOY

- Infineon Technologies AG

- iProov Ltd.

- IrisGuard Ltd.

- Jabil Inc.

- KaiKuTeK by JMicron Technology Corporation

- Microchip Technology Inc.

- Microsoft Corporation

- Nimble VR by Oculus

- Oblong Industries Inc.

- OmniVision Technologies, Inc.

- PISON Technology

- Pmdtechnologies AG

- Qualcomm Incorporated

- Sony Corporation

- Synaptics Inc.

- Ultraleap Ltd.

- Visteon Corp.

Strategic Imperatives for Industry Leaders to Capitalize on Emerging Gesture Recognition Trends and Navigate Supply Chain, Regulatory, and Integration Challenges

To maintain a competitive edge in the evolving gesture recognition arena, industry leaders must adopt a multifaceted strategy that addresses technological, operational, and regulatory dimensions. First, investing in sensor fusion research and advanced signal processing techniques will enable the development of hybrid systems that combine the strengths of infrared, radar, and computer vision modalities, delivering superior accuracy and robustness across diverse environments. Complementary investments in AI model optimization and edge computing architectures will further reduce latency and energy consumption, enhancing user experiences.
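To make the idea of a hybrid system concrete, one simple way to combine modalities is late fusion: each sensor pipeline produces its own per-gesture scores, and the scores are averaged using reliability weights. The sketch below is a minimal illustration of that general technique, not a method described in this report; the function name `fuse_predictions`, the gesture labels, the scores, and the weights are all hypothetical.

```python
from collections import defaultdict


def fuse_predictions(predictions, weights):
    """Combine per-sensor gesture scores by weighted averaging (late fusion).

    predictions: {sensor_name: {gesture_label: score in [0, 1]}}
    weights:     {sensor_name: non-negative reliability weight}
    Returns the top-scoring gesture and the fused score per gesture.
    """
    weight_sum = sum(weights[sensor] for sensor in predictions)
    if weight_sum <= 0:
        raise ValueError("at least one sensor needs a positive weight")

    fused = defaultdict(float)
    for sensor, scores in predictions.items():
        for gesture, score in scores.items():
            fused[gesture] += weights[sensor] * score / weight_sum

    best = max(fused, key=fused.get)
    return best, dict(fused)


# Hypothetical scores from three independent recognition pipelines.
predictions = {
    "infrared": {"swipe_left": 0.6, "tap": 0.4},
    "radar": {"swipe_left": 0.7, "tap": 0.3},
    "vision": {"swipe_left": 0.2, "tap": 0.8},
}
# Assumed weights: the camera is down-weighted, e.g. in poor lighting.
weights = {"infrared": 1.0, "radar": 1.0, "vision": 0.5}

gesture, fused = fuse_predictions(predictions, weights)
print(gesture, fused)
```

In this toy example the down-weighted camera is outvoted by the infrared and radar pipelines. Production systems typically go further, for example by learning the weights or fusing at the feature level, but the same principle applies: no single modality decides alone.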

Second, diversifying supply chains through partnerships with alternate component suppliers and exploring nearshore manufacturing options can mitigate tariff-related cost pressures and reinforce operational resilience. Proactively collaborating with logistics experts and legal advisors will help anticipate regulatory changes and streamline compliance with evolving trade policies. Third, engaging in industry consortiums and standards bodies will promote interoperability, accelerate ecosystem development, and facilitate cross-sector deployments, particularly in automotive and healthcare verticals where safety and reliability are paramount.

Additionally, prioritizing privacy engineering and compliance with legislation such as GDPR in Europe and CCPA in California will not only mitigate legal risks but also strengthen brand trust. Establishing partnerships with cybersecurity experts can further safeguard gesture data streams against potential threats. Furthermore, forging alliances with cloud service providers and platform integrators can expand distribution channels and foster co-innovation in scalable, cloud-native gesture recognition solutions. By executing on these strategic imperatives, organizations can not only overcome immediate challenges but also position themselves to lead the next wave of gesture-enabled innovation.

Detailing a Robust Research Framework Incorporating Primary Expert Interviews, Secondary Data Intelligence, Triangulation Techniques, and Validation Protocols

To ensure the validity and reliability of insights presented in this executive summary, a rigorous research methodology was employed, combining both primary and secondary intelligence gathering techniques. Primary research involved in-depth interviews with sensor technology experts, AI algorithm developers, system integrators, and end user stakeholders across key industry verticals. These discussions provided qualitative perspectives on emerging use cases, performance requirements, and strategic priorities.

Secondary research encompassed the systematic review of technical white papers, academic publications, industry standards documentation, and conference proceedings. Data from publicly disclosed corporate reports and regulatory filings were analyzed to understand recent product launches, patent trends, and partnership announcements. Quantitative data points, such as adoption rates and investment flows, were triangulated using multiple independent sources to validate consistency and mitigate bias.

Quantitative surveys with a representative sample of enterprise IT decision makers and individual consumers were conducted to gauge adoption readiness and preference drivers. Statistical analysis techniques, including regression analysis and cluster segmentation, were applied to identify significant correlations between application contexts and technology preferences. Additionally, AI-driven text mining of patent databases and real-time news feeds enriched trend identification, ensuring that emerging breakthroughs and competitive movements are captured comprehensively.

Throughout the process, a structured data validation protocol was maintained, featuring cross-verification of interview findings with documented evidence, peer review by industry advisors, and an iterative feedback loop to refine key themes. This layered approach ensured that conclusions and recommendations reflect a balanced view of technical feasibility, ecosystem dynamics, and strategic imperatives, providing decision makers with high-confidence guidance.

This section provides a structured overview of our comprehensive Gesture Recognition market research report, outlining the key chapters and topics covered for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- Gesture Recognition Market, by Technology

- Gesture Recognition Market, by Product

- Gesture Recognition Market, by Deployment Mode

- Gesture Recognition Market, by Application

- Gesture Recognition Market, by End User

- Gesture Recognition Market, by Region

- Gesture Recognition Market, by Group

- Gesture Recognition Market, by Country

- United States Gesture Recognition Market

- China Gesture Recognition Market

- Competitive Landscape

- List of Figures [Total: 17]

- List of Tables [Total: 1272]

Synthesizing Key Gesture Recognition Milestones, Strategic Imperatives, and Industry Outlook to Illuminate the Path Forward for Decision Makers

The convergence of advanced sensor modalities, AI-driven processing, and evolving deployment frameworks is redefining the gesture recognition landscape, elevating it from niche experimentation to strategic imperative. Key milestones, from the miniaturization of depth sensors and the advent of radar-based non-line-of-sight detection to the rise of cloud-native model orchestration, have collectively expanded the frontiers of what gesture interfaces can achieve. Strategic participation in standardization efforts and collaborative R&D initiatives has further accelerated cross-industry adoption, paving the way for interoperable, secure, and user-centric solutions.

Concurrently, regulatory shifts, notably the 2025 tariff adjustments, have prompted a realignment of supply chains, catalyzing nearshoring and cost-sharing partnerships that enhance operational resilience. Segmentation analysis underscores the importance of tailoring gesture recognition solutions to specific technology stacks, product categories, deployment modes, application use cases, and end user requirements. Regional insights reveal that while developed markets continue to spearhead innovation, emerging economies are rapidly embracing gesture technologies across consumer electronics, healthcare, and smart city deployments.

Looking forward, convergence with brain-computer interfaces and robotics control systems represents a frontier in gesture-driven interactions, while the development of edge-cloud continuum architectures will further optimize performance and privacy trade-offs. Stakeholders who proactively explore such emerging paradigms are poised to lead in the next phase of gestural computing evolution. For decision makers and technical architects, these insights outline a clear roadmap: invest in sensor fusion, diversify supply chains, contribute to ecosystem standards, and uphold data privacy principles. By synthesizing these strategic imperatives with robust methodological underpinnings, organizations are well positioned to harness the transformative power of gesture recognition as a cornerstone of future human-machine interaction.

Engage with Associate Director of Sales & Marketing Ketan Rohom to Gain Exclusive Gesture Recognition Insights and Drive Strategic Growth Initiatives Today

Explore how advanced gesture recognition capabilities can transform your product portfolio and user experiences by engaging directly with Ketan Rohom, Associate Director of Sales & Marketing. Ketan brings deep expertise in guiding organizations through market entry strategies, solution integration, and strategic partnerships within the gesture recognition domain. By connecting with Ketan, you will gain tailored insights into the latest technology developments, supply chain considerations, and application opportunities spanning automotive, consumer electronics, healthcare, and industrial sectors.

Leverage this opportunity to discuss your specific challenges, evaluate bespoke service offerings, and secure a competitive edge with comprehensive intelligence. Reach out to Ketan to discover how your organization can capitalize on emerging gesture-driven innovations and drive strategic growth initiatives today.

Contact Ketan to schedule a personalized consultation and receive an overview of the full suite of research deliverables designed to support your strategic planning and technology roadmap. Drive your next wave of innovation with confidence and clarity.
