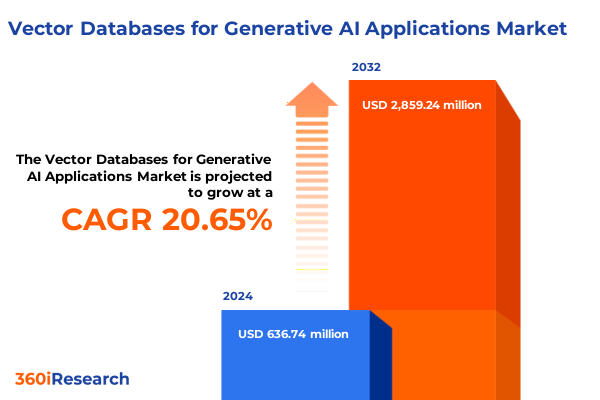

The Vector Databases for Generative AI Applications market was estimated at USD 759.89 million in 2025 and is expected to reach USD 909.67 million in 2026, growing at a CAGR of 20.84% to reach USD 2,859.24 million by 2032.
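As a quick arithmetic check on the headline figures, the sketch below recomputes the growth rates from the three quoted market sizes. It assumes the 20.84% CAGR is measured from the 2025 estimate to 2032 (seven compounding years), which the text does not state explicitly; under that assumption the result is approximately 20.84%, while the single-year step from 2025 to 2026 is a little lower. The function and variable names are illustrative.

```python
# Market sizes quoted in the paragraph above, in USD million.
size_2025 = 759.89
size_2026 = 909.67
size_2032 = 2859.24


def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end / start) ** (1 / years) - 1


# Assumption: the quoted CAGR spans 2025 -> 2032, i.e. 7 years.
cagr_2025_2032 = cagr(size_2025, size_2032, 2032 - 2025)

# Year-over-year growth implied by the 2025 and 2026 figures alone.
growth_2025_2026 = size_2026 / size_2025 - 1

print(f"CAGR 2025-2032:   {cagr_2025_2032:.2%}")
print(f"Growth 2025-2026: {growth_2025_2026:.2%}")
```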

Exploring the Emergence and Strategic Importance of Vector Databases in Powering Next-Generation Generative AI Experiences Across Industries

The rapid advancement of generative AI models has brought vector databases from niche research projects into the mainstream of enterprise IT strategies. Early adopters recognized that embedding-based architectures require specialized storage and retrieval systems capable of handling high-throughput similarity searches while ensuring low latency. As organizations across diverse sectors began experimenting with large language models, image synthesis engines, and recommendation systems, it became clear that traditional relational databases and document stores could not meet the performance demands of real-time inference. Vector databases emerged as the essential infrastructure layer to bridge this gap, providing scalable support for embedding vectors and enabling applications to deliver contextual, responsive experiences.

In recent years, improvements in GPU acceleration, the proliferation of open source embedding frameworks, and tighter integration with popular machine learning platforms have all converged to accelerate the adoption curve of vector databases. Cloud providers introduced managed services that abstract away complex indexing and partitioning tasks, democratizing access for organizations without deep infrastructure expertise. Meanwhile, proprietary vendors have differentiated their offerings through advanced features such as multi-modal search, dynamic index updates, and end-to-end data encryption. As a result, enterprises are now evaluating vector databases not merely as experimental add-ons but as critical components of their data architecture, essential for powering next-generation AI-driven applications.

Looking ahead, the capacity to support billions of high-dimensional embeddings efficiently is becoming a competitive differentiator. Companies that architect their AI systems with a robust vector storage layer are positioned to innovate more rapidly, delivering personalized interactions, real-time insights, and adaptive workflows at scale. This introduction lays the foundation for understanding how vector databases have evolved to become the backbone of generative AI deployments, setting the stage for a deeper exploration of the landscape’s most transformative shifts.

Unveiling the Technological and Market Shifts Driving the Rapid Adoption of Vector Databases for Generative AI Workloads Worldwide

The vector database landscape has undergone several transformative shifts as enterprises transition from proofs of concept to production-grade AI systems. Initially, academic research emphasized algorithmic efficiency, leading to the development of approximate nearest neighbor (ANN) techniques that drastically reduce search times for high-dimensional vectors. These algorithmic breakthroughs laid the groundwork for open source projects, making sophisticated indexing strategies accessible to a broader developer community. Subsequently, the rapid expansion of generative AI use cases, ranging from personalized content generation to real-time anomaly detection, drove demand for systems that could handle dynamic data updates without compromising performance.
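To make the contrast between exact and approximate nearest neighbor search concrete, here is a minimal, dependency-free sketch. It compares a brute-force cosine scan with a toy random-hyperplane locality-sensitive hashing index. This is only one simple ANN family, chosen for brevity; the report does not name a specific algorithm, and production systems typically use more sophisticated structures. The class and function names (`HyperplaneLSH`, `exact_top_k`) are hypothetical.

```python
import math
import random
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def exact_top_k(query, vectors, k):
    """Brute force: score every stored vector, so cost grows linearly with the collection."""
    scored = sorted(((cosine(query, v), i) for i, v in enumerate(vectors)), reverse=True)
    return [i for _, i in scored[:k]]


class HyperplaneLSH:
    """Toy ANN index: vectors with the same sign pattern against random hyperplanes share a bucket."""

    def __init__(self, dim, n_planes, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_planes)]
        self.buckets = defaultdict(list)
        self.vectors = []

    def _key(self, v):
        return tuple(sum(p * x for p, x in zip(plane, v)) >= 0 for plane in self.planes)

    def add(self, v):
        self.buckets[self._key(v)].append(len(self.vectors))
        self.vectors.append(v)

    def query(self, q, k):
        # Only vectors in the query's bucket are scored; near neighbors that
        # hashed elsewhere are missed, which is the accuracy/speed trade-off.
        candidates = self.buckets.get(self._key(q), [])
        scored = sorted(((cosine(q, self.vectors[i]), i) for i in candidates), reverse=True)
        return [i for _, i in scored[:k]]


# Synthetic data purely for illustration.
rng = random.Random(42)
data = [[rng.gauss(0, 1) for _ in range(16)] for _ in range(500)]
index = HyperplaneLSH(dim=16, n_planes=6)
for vec in data:
    index.add(vec)

query = data[7]
exact = exact_top_k(query, data, 3)
approx = index.query(query, 3)
scanned = len(index.buckets[index._key(query)])
print(exact, approx, f"scored {scanned} of {len(data)} vectors")
```

The approximate query scores only the vectors in one bucket rather than the whole collection, at the cost of possibly missing some true neighbors. Real indexes mitigate that loss with multiple hash tables, graph structures, or re-ranking.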

Concurrently, the integration between vector databases and cloud-native infrastructure emerged as a critical enabler. Containerization and serverless architectures allowed organizations to deploy vector stores alongside machine learning inference pipelines, reducing data movement and operational overhead. At the same time, advancements in hardware acceleration, including GPU- and FPGA-based indexing engines, have further compressed query latencies and optimized resource utilization. As a result, vector databases have shifted from monolithic, on-prem indexers to distributed, elastic services capable of scaling in tandem with unpredictable workload spikes.

Moreover, the convergence of vector search with complementary technologies (such as similarity graph traversal, hybrid search combining traditional keyword matching with embedding retrieval, and real-time analytics) has broadened the applicability of vector databases. These innovations are redefining how organizations architect AI solutions, prompting a paradigm shift from batch inference to interactive, conversational workflows. Taken together, these technological and market shifts are revolutionizing enterprise data architectures, making vector databases an indispensable foundation for the next wave of generative AI applications.
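One common way to combine keyword matching with embedding retrieval is to fuse the two ranked result lists. The sketch below uses reciprocal rank fusion, a widely used technique that is assumed here for illustration rather than drawn from the report. The document IDs and the constant `k=60` are placeholder values.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Fuse several ranked lists of document IDs into one list.

    Each document earns 1 / (k + rank) from every list it appears in,
    so items ranked well by both retrievers rise to the top.
    """
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score; break ties by ID for deterministic output.
    return sorted(scores, key=lambda d: (-scores[d], d))


# Hypothetical results from a keyword index and a vector index.
keyword_hits = ["doc_a", "doc_b", "doc_c"]
vector_hits = ["doc_c", "doc_a", "doc_d"]

fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)  # ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

Because the fusion works on ranks rather than raw scores, it avoids having to normalize keyword relevance scores and vector similarities onto a common scale.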

Assessing the Cumulative Impact of 2025 United States Tariffs on Hardware Supply Chains and Vector Database Deployment for AI Applications

In 2025, changes to United States trade policy introduced new tariffs on critical hardware components, including specialized AI accelerators and memory modules that are integral to high-performance vector database deployments. These increased import duties have led to higher capital expenditures for organizations sourcing on-premise servers and for cloud providers that rely on international hardware supply chains. As a result, procurement teams are re-evaluating their vendor agreements, opting for regional manufacturing partnerships where possible and exploring alternative hardware architectures that can deliver comparable throughput at lower duty rates.

The ripple effects of these tariffs have also influenced cloud service pricing models. Major cloud platforms have adjusted instance costs to reflect the higher underlying infrastructure expenses, passing a portion of the tariff burden onto end customers. This, in turn, has prompted enterprises to assess hybrid strategies, shifting some compute-intensive indexing workloads back to localized data centers to leverage existing hardware inventory and avoid incremental import costs. In parallel, proprietary vector database providers are deepening partnerships with domestic OEMs to secure tariff-exempt supply, while open source projects are gaining traction among budget-conscious organizations seeking to minimize dependency on specialized hardware.

Despite these headwinds, the new tariff landscape has accelerated innovation in software-based optimization techniques. Frameworks now offer more efficient compression schemes, adaptive sharding mechanisms, and cost-aware query planners that reduce overall hardware footprints. As enterprises navigate this evolving policy environment, those that successfully balance performance requirements with hardware cost management will achieve a sustainable competitive advantage in deploying scalable, maintainable vector database infrastructures.
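As an illustration of how compression can shrink the hardware footprint, the following sketch applies simple 8-bit scalar quantization to an embedding. This is one basic scheme assumed for illustration; the report does not specify which compression methods vendors use. Each dimension is stored in one byte instead of the four bytes of a 32-bit float, at the cost of a bounded rounding error. Function names and the sample vector are hypothetical.

```python
def quantize_8bit(vector):
    """Map each float to an integer code in 0..255 using the vector's own min/max range."""
    lo, hi = min(vector), max(vector)
    scale = (hi - lo) / 255 if hi > lo else 1.0
    codes = bytes(round((x - lo) / scale) for x in vector)
    return codes, lo, scale


def dequantize_8bit(codes, lo, scale):
    """Reconstruct approximate floats from the stored codes."""
    return [lo + c * scale for c in codes]


# Illustrative embedding values only.
embedding = [0.12, -0.53, 0.88, 0.0, -0.97, 0.45]

codes, lo, scale = quantize_8bit(embedding)
restored = dequantize_8bit(codes, lo, scale)
max_error = max(abs(a - b) for a, b in zip(embedding, restored))

print(f"{len(codes)} bytes stored, max reconstruction error {max_error:.4f}")
```

The reconstruction error per dimension is at most half a quantization step (`scale / 2`), so the trade-off between memory and accuracy can be reasoned about directly.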

Deriving Strategic Insights from Comprehensive Segmentation by Database Type, Data Categories, Techniques, Deployment, and Industry Verticals

A nuanced analysis of market segmentation reveals distinct adoption patterns across database types, data categories, technical approaches, deployment models, and industry verticals. When considering open source versus proprietary offerings, organizations with in-house engineering expertise typically gravitate toward community-driven solutions to capitalize on flexibility and lower licensing overhead, while entities requiring robust vendor support and service level agreements favor proprietary platforms. In terms of data type stored, use cases that process image embeddings for computer vision tasks often demand vector stores optimized for higher dimensionality, whereas speech and audio retrieval applications emphasize real-time indexing and dynamic updates. Text-based use cases, including document search and conversational AI, frequently adopt hybrid architectures that combine traditional inverted indices with vector-based similarity search.

The choice of similarity search, vector indexing, or vector storage techniques further differentiates deployments. Organizations prioritizing blazing-fast query responses often integrate advanced approximate nearest neighbor algorithms, while those with large embedding footprints might emphasize storage efficiency and partitioning strategies. Deployment mode decisions, between cloud and on-premise, reflect organizational preferences for operational control versus elasticity. Enterprises in regulated industries may choose on-premise setups to satisfy data sovereignty requirements, whereas cloud-native companies leverage managed services for rapid scaling.

Finally, industry verticals exhibit tailored adoption curves. Automotive firms leverage vector databases for sensor fusion and autonomous driving data ingestion, banking and financial services institutions exploit embedding retrieval for fraud detection and customer segmentation, healthcare organizations implement multimodal search for medical imaging and EHR integration, IT & telecom providers deploy recommendation engines to personalize network optimization, manufacturing companies optimize supply chain analytics, and retail players enhance product discovery and virtual merchandising. Within the BFSI segment, asset management firms focus on portfolio risk analysis, banks on real-time transaction monitoring, and insurance companies on claims adjudication powered by similarity search.

This comprehensive research report categorizes the Vector Databases for Generative AI Applications market into clearly defined segments, providing a detailed analysis of emerging trends and precise revenue forecasts to support strategic decision-making.

- Database Type

- Data Type Stored

- Technique

- Deployment Mode

- Industry

Analyzing Regional Dynamics Shaping Vector Database Adoption Across Americas, Europe, Middle East, Africa, and Asia-Pacific Markets

The Americas region maintains a leadership position in vector database adoption, driven by early investments in AI research, extensive cloud infrastructure, and a concentration of hyperscale technology providers. North American enterprises are pushing the envelope on real-time recommendation systems and conversational AI, leveraging advanced vector indexing to differentiate customer experiences. In addition, strong venture capital funding has fueled the growth of specialized startups, creating a vibrant ecosystem of niche solutions and integrators.

In Europe, the Middle East, and Africa, regulatory frameworks around data privacy and cross-border data flows have shaped vector database strategies. Organizations in EMEA often deploy on-premise or hybrid configurations to comply with stringent sovereignty requirements, while still embracing cloud-native efficiencies through regionally operated data centers. Localized use cases, such as personalized healthcare diagnostics and smart manufacturing initiatives, are driving demand for high-precision embedding retrieval within controlled data environments.

Asia-Pacific exhibits the fastest growth trajectory, underpinned by aggressive AI infrastructure investments in China, India, Japan, and South Korea. Enterprises in this region are rapidly adopting vector databases for large-scale language models, real-time video analytics, and next-generation consumer platforms. Government-sponsored AI programs and strong partnerships between local cloud providers and hardware manufacturers have lowered the barrier to entry, enabling organizations to deploy vector search capabilities at unprecedented scales. Across all regions, the interplay between regulatory considerations, infrastructure maturity, and local innovation ecosystems continues to influence the shape and pace of vector database adoption.

This comprehensive research report examines key regions that drive the evolution of the Vector Databases for Generative AI Applications market, offering deep insights into regional trends, growth factors, and industry developments that are influencing market performance.

- Americas

- Europe, Middle East & Africa

- Asia-Pacific

Highlighting Leading Vector Database Providers and Their Differentiated Strategies in the Generative AI Ecosystem

Leading providers in the vector database space have differentiated themselves through unique value propositions and strategic partnerships. Pinecone has gained prominence for its fully managed, serverless service that abstracts the complexities of index administration and scaling. By focusing on seamless integration with popular machine learning frameworks, it attracts organizations looking to rapidly prototype AI search capabilities without deep infrastructure investment. Similarly, Weaviate has distinguished itself by offering an open source, graph-aware vector store that couples semantic search with knowledge graph functionality, appealing to enterprises that require contextual reasoning alongside similarity retrieval.

Zilliz’s Milvus project has garnered attention for its high-performance, container-native architecture, enabling easy deployment in Kubernetes environments. Its active open source community and compatibility with diverse storage backends position it as an agile choice for teams with hybrid infrastructure requirements. Qdrant, another open source contender, emphasizes advanced filtering and payload-aware search, catering to applications that need fine-grained control over vector result sets. On the proprietary front, Redis (formerly Redis Labs) has introduced vector search modules within its in-memory data platform, offering sub-millisecond retrieval for latency-sensitive tasks, while Vespa continues to evolve its enterprise-grade solution with built-in relevance tuning and real-time analytics.

These companies are further differentiated by their approaches to partnerships and ecosystem support. Collaborations with hyperscale cloud providers, integration with DevOps toolchains, and joint go-to-market initiatives with AI platform vendors have become key tactics for scaling adoption. As competition intensifies, providers are investing in advanced features such as multi-modal retrieval, dynamic index rebalancing, and end-to-end security certifications to win enterprise trust and capture emerging use cases.

This comprehensive research report delivers an in-depth overview of the principal market players in the Vector Databases for Generative AI Applications market, evaluating their market share, strategic initiatives, and competitive positioning to illuminate the factors shaping the competitive landscape.

- Algolia

- Amazon Web Services, Inc.

- Azumo LLC.

- Chroma, Inc.

- Cloudelligent LLC

- Cortical.io

- Couchbase, Inc.

- Coveo Solutions Inc.

- Cyfuture India Pvt. Ltd.

- Dataiku

- DataStax, Inc.

- Elasticsearch B.V.

- FD Technologies PLC

- Google LLC by Alphabet Inc.

- International Business Machines Corporation

- Kinetica DB Inc

- LanceDB Systems, Inc.

- Lucidworks

- Microsoft Corporation

- Milvus

- MindsDB

- Mission Cloud Services Inc.

- MongoDB, Inc.

- Okoone Ltd.

- Oracle Corporation

- Pinecone Systems, Inc.

- Qdrant Solutions GmbH

- Redis Ltd.

- SeMI Technologies B.V.

- SingleStore, Inc.

- Snowflake, Inc.

- Supabase Inc

- Teradata

- The PostgreSQL Global Development Group

- Vespa.ai AS

- Weaviate

- YugabyteDB, Inc.

- Zilliz Limited

Formulating Actionable Strategic Recommendations for Industry Leaders to Accelerate Vector Database Integration in Generative AI Solutions

Industry leaders should begin by evaluating vector database solutions against their current AI infrastructure, ensuring compatibility with existing embedding pipelines and inference frameworks. Prioritizing platforms that offer flexible deployment models will allow organizations to balance performance requirements with regulatory and cost considerations. Next, it is critical to pilot both open source and proprietary options in controlled environments, measuring key metrics such as query latency, index update performance, and operational overhead to derive data-driven vendor selection criteria.
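When piloting candidate systems, query latency is usually reported as percentiles rather than averages. The sketch below shows one way such a measurement harness might look, using only the standard library. `measure_latency` and `fake_search` are hypothetical stand-ins: in a real pilot, `search_fn` would wrap the client call of the database under evaluation.

```python
import statistics
import time


def measure_latency(search_fn, queries):
    """Time each query and summarize the samples as p50, p95, and max (milliseconds)."""
    samples_ms = []
    for q in queries:
        start = time.perf_counter()
        search_fn(q)
        samples_ms.append((time.perf_counter() - start) * 1000)
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    cuts = statistics.quantiles(samples_ms, n=100, method="inclusive")
    return {
        "p50_ms": statistics.median(samples_ms),
        "p95_ms": cuts[94],
        "max_ms": max(samples_ms),
    }


def fake_search(q):
    """Placeholder workload standing in for a real vector database query."""
    return sorted(range(1000), key=lambda i: (i * q) % 97)[:5]


report = measure_latency(fake_search, queries=range(1, 201))
print(report)
```

Running the same harness against each candidate, with the same query set and after a warm-up pass, gives comparable numbers for vendor selection. Index update performance can be measured the same way by timing insert or upsert calls.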

As a complementary step, enterprises should forge strategic partnerships with hardware providers to secure optimized compute resources, leveraging co-engineering agreements that can mitigate the impact of tariff-driven cost increases. Investing in upskilling internal teams on vector search techniques and advanced indexing algorithms will unlock the full potential of these platforms. To promote cross-functional alignment, leaders must establish governance frameworks that integrate data quality, security, and compliance requirements into the vector database lifecycle, from dataset curation through to model inference.

Finally, organizations should adopt an iterative optimization approach, leveraging production metrics and real-time monitoring to continuously refine index structures and query strategies. By treating the vector database as a living component of the AI stack rather than a one-time deployment, teams can adapt to shifting workload patterns, evolving user expectations, and emerging use cases. This proactive stance will ensure that vector search infrastructure remains a catalyst for innovation rather than a performance bottleneck.

Outlining the Rigorous Research Methodology and Analytical Framework Underpinning This Comprehensive Vector Database Market Study

This research employs a blended methodology combining primary and secondary data sources to deliver a comprehensive view of the vector database market. Primary data collection involved in-depth interviews with enterprise practitioners, technology architects, and vendor executives to capture real-world deployment experiences, infrastructure challenges, and feature priorities. These qualitative insights were further validated through a series of surveys targeting key end-user segments across industries such as automotive, finance, healthcare, and retail.

Secondary research drew upon a wide array of technical white papers, standards documentation, and publicly available product benchmarks to analyze performance characteristics, architectural variations, and feature sets across leading vector database offerings. Industry consortium reports and academic publications provided additional context on algorithmic developments in approximate nearest neighbor search, compression techniques, and hardware accelerators. To ensure the reliability of findings, data triangulation was conducted by cross-referencing vendor disclosures, customer case studies, and third-party performance tests.

The analytical framework incorporated a multi-dimensional segmentation approach, examining database type, data category, technique, deployment mode, and industry vertical to surface differentiated adoption patterns. Regional dynamics were assessed by mapping infrastructure maturity, regulatory environments, and ecosystem partnerships. Finally, the research team conducted a series of validation workshops with subject matter experts to refine insights and recommendations, ensuring the report’s conclusions are grounded in both empirical evidence and practical relevance.

This section provides a structured overview of the report, outlining the key chapters and topics covered in the Vector Databases for Generative AI Applications market research report for easy reference.

- Preface

- Research Methodology

- Executive Summary

- Market Overview

- Market Insights

- Cumulative Impact of United States Tariffs 2025

- Cumulative Impact of Artificial Intelligence 2025

- Vector Databases for Generative AI Applications Market, by Database Type

- Vector Databases for Generative AI Applications Market, by Data Type Stored

- Vector Databases for Generative AI Applications Market, by Technique

- Vector Databases for Generative AI Applications Market, by Deployment Mode

- Vector Databases for Generative AI Applications Market, by Industry

- Vector Databases for Generative AI Applications Market, by Region

- Vector Databases for Generative AI Applications Market, by Group

- Vector Databases for Generative AI Applications Market, by Country

- United States Vector Databases for Generative AI Applications Market

- China Vector Databases for Generative AI Applications Market

- Competitive Landscape

- List of Figures [Total: 17]

- List of Tables [Total: 1113]

Concluding Remarks on the Strategic Imperatives and Future Trajectory of Vector Databases in Fueling Generative AI Innovation

Vector databases have progressed from experimental research tools to mission-critical infrastructure components that underpin the most advanced generative AI applications. Their ability to provide efficient, scalable storage and retrieval of high-dimensional embeddings is transforming how organizations approach search, recommendation, and conversational interfaces. As we have explored, technological innovations in indexing algorithms, cloud integration, and hardware optimization, combined with evolving regulatory and tariff landscapes, are collectively shaping a dynamic ecosystem of solutions and strategies.

The insights derived from comprehensive segmentation reveal that no single deployment model or technical approach suits every use case; rather, successful adopters tailor their vector database strategies to specific data types, performance requirements, and compliance mandates. Regional considerations further underscore the importance of aligning infrastructure choices with local regulations and ecosystem capabilities. Meanwhile, leading providers continue to differentiate through partnerships, feature enhancements, and ecosystem contributions.

In closing, the vector database market stands at a pivotal juncture where strategic decisions regarding platform selection, deployment architecture, and optimization processes will determine whether organizations can fully harness the power of generative AI. By understanding the market’s key drivers, segmentation dynamics, and regional nuances, decision-makers are equipped to craft robust, adaptive solutions that deliver sustained innovation and competitive advantage.

Engage Directly with Our Associate Director of Sales and Marketing to Secure Your Comprehensive Vector Database Market Research Report

If you’re seeking to deepen your understanding of vector database dynamics and uncover strategic pathways for deploying advanced AI architectures, reach out to Ketan Rohom, the Associate Director of Sales & Marketing. With extensive expertise in enterprise analytics and AI infrastructure, Ketan can guide you through the detailed insights and customized recommendations contained within the complete report. Engage directly to arrange a personalized briefing, explore tailored licensing options, and secure a comprehensive roadmap for leveraging vector databases to their fullest potential. This conversation will not only clarify how the latest technological shifts and regulatory factors impact your specific environment but also offer you a clear action plan for accelerating innovation within your organization. Connect today to ensure you have the authoritative intelligence needed to stay ahead in a fast-evolving generative AI ecosystem, and to make informed, strategic decisions that drive sustainable competitive advantage.
